Today was the 4th International Workshop on Utility-Driven Mining and Learning (UDML 2021), held at the IEEE ICDM 2021 conference. As a co-organizer of the workshop, I will give a brief report about it.

What is UDML?

UDML is a workshop that has been held for the last four years. It was first held at the KDD 2018 conference, and then at the ICDM 2019, ICDM 2020 and ICDM 2021 conferences.

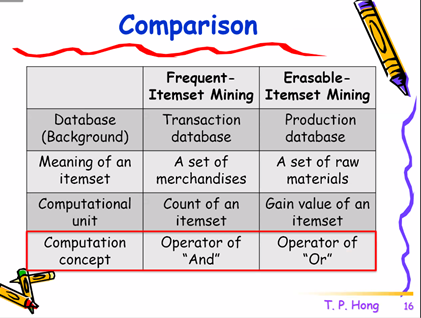

The focus of the UDML workshop is how to integrate the concept of utility into data mining and machine learning. Utility is a broad concept that represents the importance or value of patterns or models. For instance, when analyzing customer data, the utility may represent the profit made from sales of products, while in a multi-agent system, utility may be a measure of how desirable a state is. For many machine learning or data mining problems, it is desirable to find patterns that have a high utility or models that optimize some utility function. This is the core topic of the workshop. But the workshop is also open to other related topics. For example, most pattern mining papers can fit the scope of the workshop, as utility can be interpreted more broadly as a way of finding interesting patterns.
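To make the customer data example more concrete, here is a minimal sketch of how the utility of an itemset can be computed as profit, next to its plain support (frequency). The item names, unit profits, quantities and function names are all hypothetical and chosen only for illustration. The definition used (quantity times unit profit, summed over the transactions that contain the whole itemset) is the usual one in high utility itemset mining, but it is only one of many possible utility functions.

```python
# Toy illustration of "utility as profit" in a customer transaction database.
# All data below is made up for the example.

# Assumed unit profit of each item.
unit_profit = {"bread": 1, "milk": 2, "wine": 10}

# Each transaction maps an item to the purchased quantity.
transactions = [
    {"bread": 2, "milk": 1},
    {"bread": 1, "wine": 1},
    {"bread": 3, "milk": 2, "wine": 1},
]


def support(itemset, transactions):
    """Number of transactions that contain every item of the itemset."""
    return sum(1 for t in transactions if all(item in t for item in itemset))


def itemset_utility(itemset, transactions, unit_profit):
    """Total profit generated by the itemset.

    For each transaction containing the whole itemset, add
    quantity * unit profit for each item of the itemset.
    """
    total = 0
    for t in transactions:
        if all(item in t for item in itemset):
            total += sum(t[item] * unit_profit[item] for item in itemset)
    return total


if __name__ == "__main__":
    for itemset in [{"bread"}, {"bread", "milk"}, {"bread", "wine"}]:
        print(sorted(itemset),
              "support =", support(itemset, transactions),
              "utility =", itemset_utility(itemset, transactions, unit_profit))
```

In this toy data, {bread} is the most frequent itemset but yields little profit, while {bread, wine} appears less often and yields much more. This is why frequency alone is often not enough to find the patterns that matter.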

The program

UDML 2021 was held online due to the COVID pandemic. We had a great program with an invited keynote talk and 8 accepted papers selected from about 14 submissions. All papers were reviewed by several reviewers. The papers are published in the IEEE ICDM Workshop proceedings, which ensures good visibility. In addition, a special issue of the journal Intelligent Data Analysis was announced for extended versions of the workshop papers.

Keynote talk by Prof. Tzung-Pei Hong

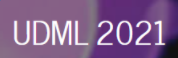

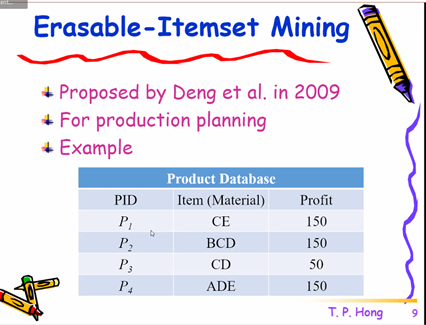

The first part of the workshop was the keynote by Prof. Tzung-Pei Hong from the National University of Kaohsiung, who kindly agreed to give a talk. The talk was very interesting. In short, Prof. Hong showed that the problem of erasable itemset mining can be converted to the problem of frequent itemset mining, and that the opposite is also possible. This implies that one can reuse the very efficient frequent itemset mining algorithms with some small modifications to solve the problem of erasable itemset mining. The details of how to convert one problem to the other were presented. An experimental comparison was also presented using the Apriori algorithm (for frequent itemset mining) and the META algorithm (for erasable itemset mining). It was found that META is faster for smaller or sparser databases, but that Apriori is faster in other cases.
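For readers who are not familiar with the two problems, here is a small sketch of the measures they rely on, written under the definitions as I understand them from the literature. This is not the conversion presented in the talk. In frequent itemset mining, an itemset is kept if its support is high enough. In erasable itemset mining, each product is made of some components and has a profit, and an itemset of components is erasable if the profit lost by no longer producing the products that use any of these components stays under a threshold. The product data, the function names and the `max_ratio` parameter are hypothetical.

```python
# Toy comparison of the measures behind frequent and erasable itemset mining.
# All data below is made up for the example.

# Each product: (set of components it is made of, profit it generates).
products = [
    ({"a", "b"}, 100),
    ({"b", "c"}, 50),
    ({"c", "d"}, 30),
    ({"a", "d"}, 20),
]


def support(itemset, products):
    """Frequent itemset mining measure:
    number of products that contain ALL items of the itemset."""
    return sum(1 for items, _ in products if itemset <= items)


def gain(itemset, products):
    """Erasable itemset mining measure:
    total profit of products that contain AT LEAST ONE item of the itemset."""
    return sum(profit for items, profit in products if itemset & items)


def is_erasable(itemset, products, max_ratio):
    """An itemset is erasable if its gain does not exceed
    max_ratio times the total profit of all products."""
    total_profit = sum(profit for _, profit in products)
    return gain(itemset, products) <= max_ratio * total_profit


if __name__ == "__main__":
    for itemset in [{"a"}, {"d"}, {"c", "d"}]:
        print(sorted(itemset),
              "support =", support(itemset, products),
              "gain =", gain(itemset, products),
              "erasable at 30% =", is_erasable(itemset, products, 0.3))
```

The two measures look at containment in opposite ways (all items versus at least one item), which gives some intuition for why a conversion between the two problems is plausible.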

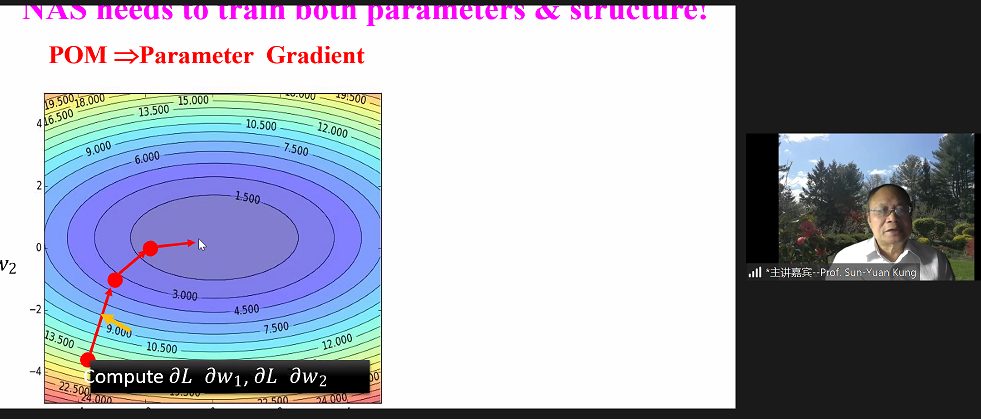

Here are a few slides from this presentation:

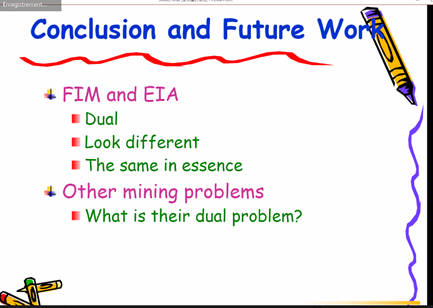

Paper presentations

Eight papers were presented:

| Paper ID: DM368, Md. Tanvir Alam, Amit Roy, Chowdhury Farhan Ahmed, Md. Ashraful Islam, and Carson Leung, “Mining High Utility Subgraphs” |

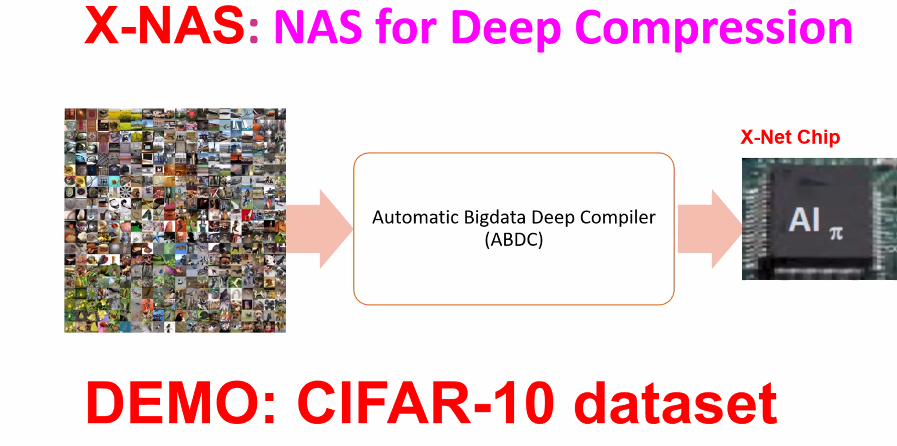

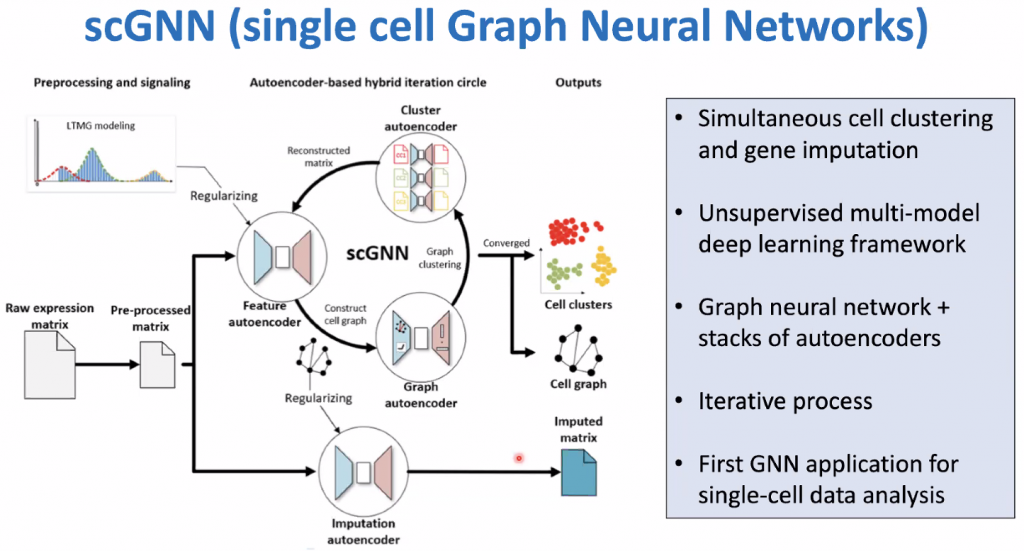

| Paper ID: S10201, Cedric Kulbach and Steffen Thoma, “Personalized Neural Architecture Search” (best paper award) |

| Paper ID: S10213, Uday Kiran Rage, Koji Zettsu, “A Unified Framework to Discover Partial Periodic-Frequent Patterns in Row and Columnar Temporal Databases” |

| Paper ID: S10210, Wei Song, Caiyu Fang, and Wensheng Gan, “TopUMS: top-k utility mining in stream data” |

| Paper ID: S10211, Mourad Nouioua, Philippe Fournier-Viger, Jun-Feng Qu, Jerry Chun-Wei Lin, Wensheng Gan, and Wei Song, “CHUQI-Miner: Mining Correlated Quantitative High Utility Itemsets” |

| Paper ID: S10209, Chi-Jen Wu, Wei-Sheng Zeng, and Jan-Ming Ho, “Optimal Segmented Linear Regression for Financial Time Series Segmentation” |

| Paper ID: S10202, Jerry Chun-Wei Lin, Youcef Djenouri, Gautam Srivastava, and Jimmy Ming-Tai Wu, “Large-Scale Closed High-Utility Itemset Mining” |

| Paper ID: S10203, Yangming Chen, Philippe Fournier-Viger, Farid Nouioua, and Youxi Wu, “Sequence Prediction using Partially-Ordered Episode Rules” |

These papers cover various topics in pattern mining, such as high utility itemset mining, subgraph mining, high utility quantitative itemset mining and periodic pattern mining, as well as some machine learning topics such as linear regression and neural networks.

Best paper award of UDML 2021

This year, a best paper award was given. The paper was selected based on the review scores and a discussion among the organizers. The recipient of the award is the paper “Personalized Neural Architecture Search”, which presented an approach to search for a good neural network architecture that optimizes some criteria (in other words, a form of utility).

Conclusion

That was a brief overview of UDML 2021. The workshop was quite successful, so we plan to organize the UDML workshop again next year, likely at the ICDM conference, but we are also considering KDD as another possibility. There will also be another special issue for next year’s workshop.

—

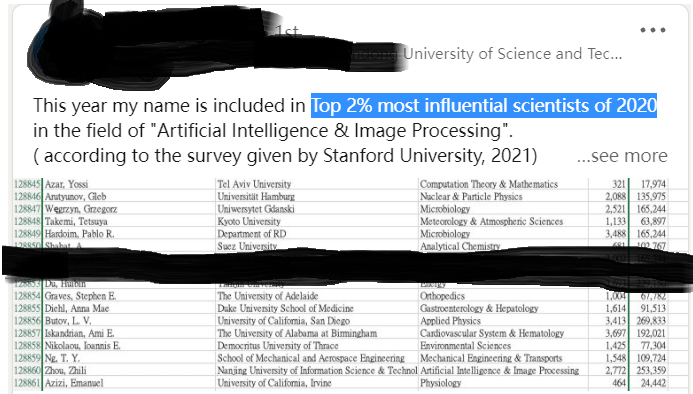

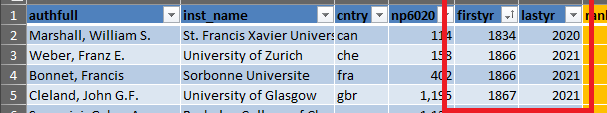

Philippe Fournier-Viger is a full professor working in China and founder of the SPMF open source data mining software.