In this post, I want to talk about a special session on Knowledge Science and Intelligent Computing (KSIC) that I am co-organizing this year at the SOMET 2026 conference (25th Int. Conf. on Intelligent Software Methodologies, Tools, and Techniques). I would like to invite you to submit your research papers!

The conference proceedings will be published by IOS Press and indexed in SCOPUS. The important dates are:

- Paper submission deadline: March 16, 2026 (may be extended)

- Notification to authors: May 10, 2026.

- Camera-Ready papers: June 15, 2026.

Relevant topics include, but are not limited to, the following:

- Knowledge Reasoning and Representation

- Knowledge-based software engineering.

- Knowledge Representation and Reasoning

- Knowledge engineering application.

- Ontological engineering.

- Symbolic reasoning in Large Language Models

- Reality automated generation

- Cognitive foundations of knowledge

- Intelligent systems.

- Intelligent Information Systems.

- Robotics and Cybernetics.

- Distributed and Parallel Processing.

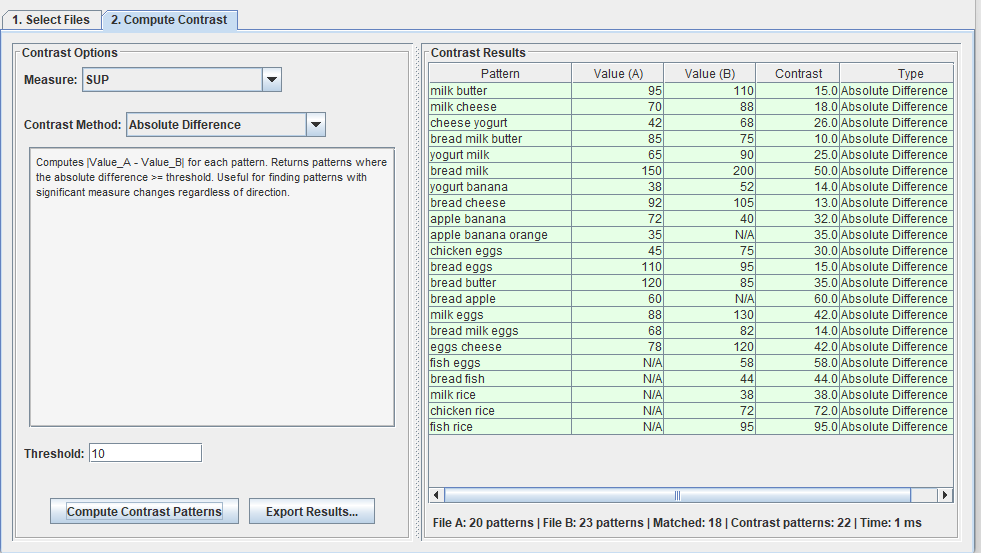

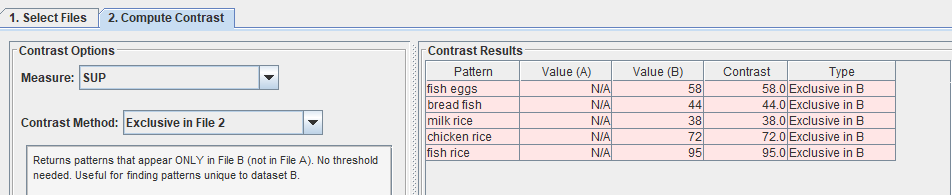

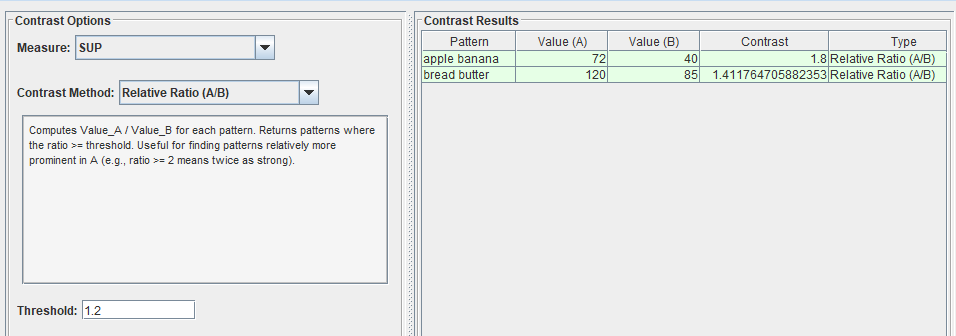

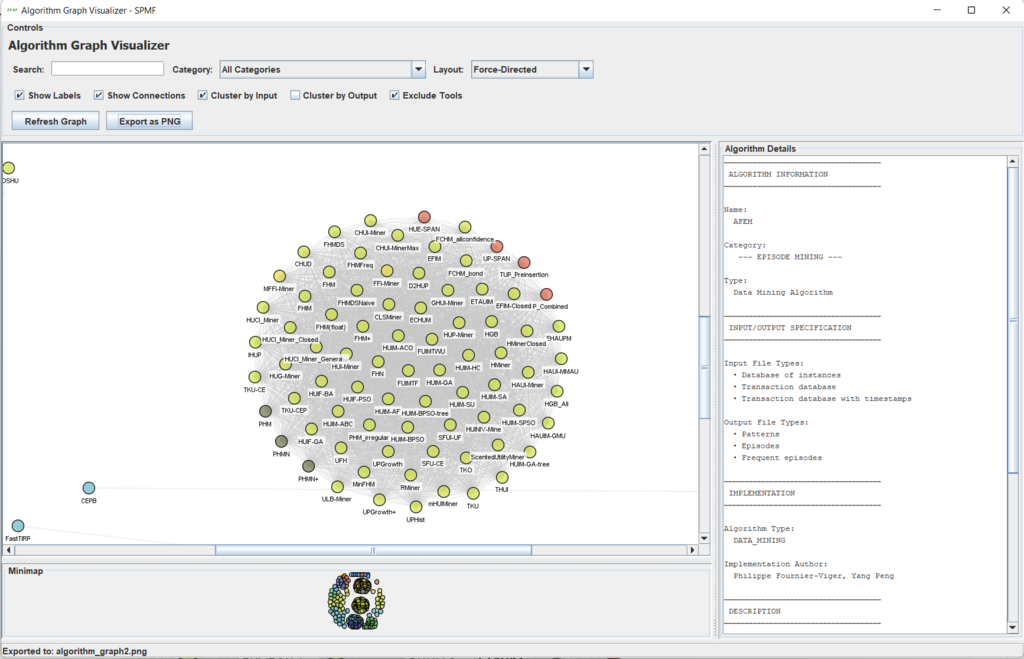

- Aspects of Data Mining.

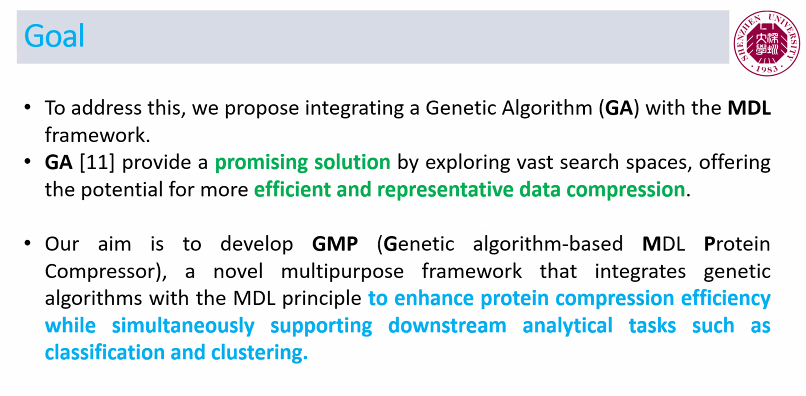

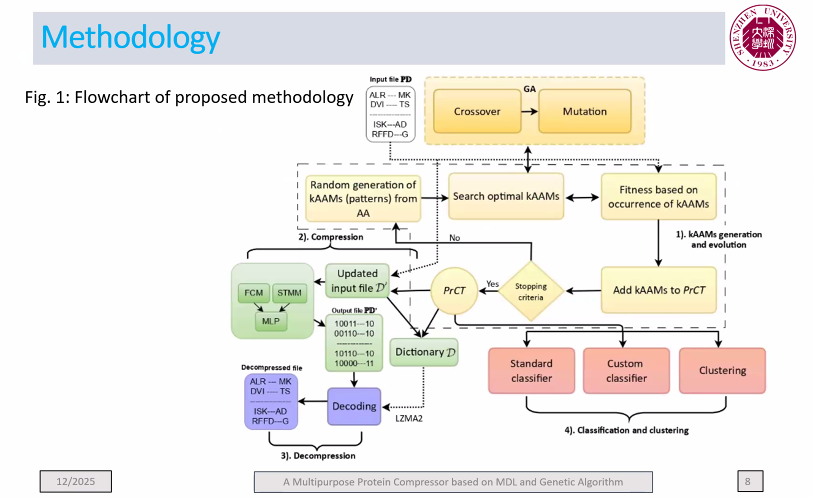

- Bio-informatics.

- Knowledge extraction from text, video, signals and images.

- Search and mining of a variety of data, including scientific and engineering, social, and sensor/IoT/IoE data.

- Intelligent Computational Modeling.

- Mobility and Big Data.

Session Organizers

▪ Nhon V. Do, Hong Bang International University, Vietnam.

▪ Philippe Fournier-Viger, Shenzhen University, China.

▪ Hien D. Nguyen, University of Information Technology, VNU-HCM, Vietnam.

For more information about the special session and the conference, please visit the SOMET 2026 conference website.