CSRankings (https://csrankings.org/) is a popular website that ranks computer science departments around the world. In this blog post, I will talk about this ranking and some of its shortcomings. Of course, no ranking is perfect, and what I write below is just my personal opinion.

What is CSRankings?

First, it should be said that many rankings exist for evaluating computer science departments, and they use various criteria based on teaching, research, or a combination of both. CSRankings focuses purely on research. It evaluates a department by its research output, in terms of articles published in the very top-level conferences. A good thing about this ranking is that its algorithm is completely transparent and well explained: (1) it uses public data, and (2) the code that assigns a score to each department is documented and open-source.

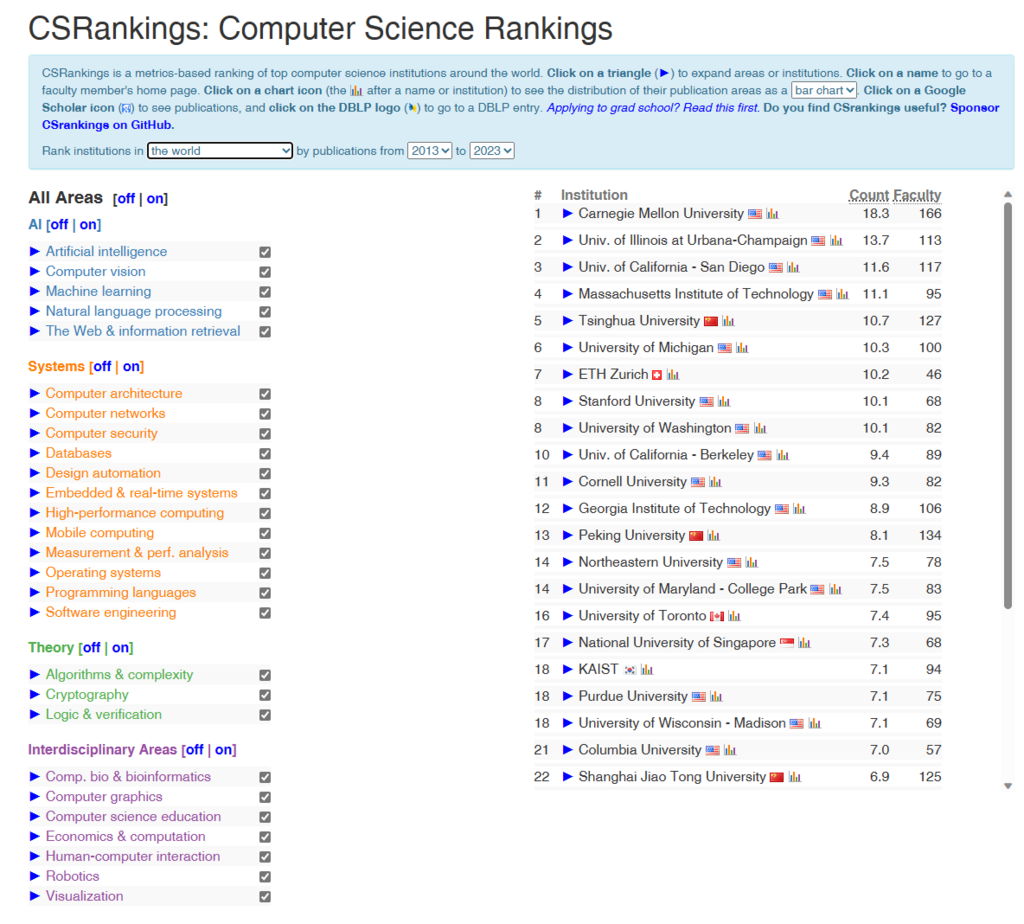

The ranking looks like this:

Shortcomings of CSRankings

Now, let’s talk about what I see as the main shortcomings of CSRankings:

1) The ranking ignores journal papers and focuses only on conference papers, but in several countries journals are deemed more important than conference publications. Thus, the ranking is biased against departments that publish mostly in journals.

2) It is a US-centric ranking. As explained in the FAQ of CSRankings, a conference is only included in this ranking if at least 50 R1 US universities have published in it during the last 10 years.

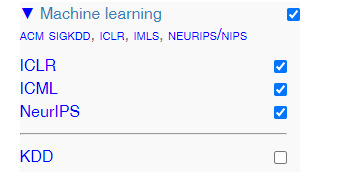

3) Some sub-fields of computer science are not well represented, and some included conferences appear to be easier to publish in than others. For example, I am a data mining researcher, and KDD is arguably the top conference in my field. KDD is highly competitive, with thousands of submissions and an acceptance rate that is generally around 10-20%, but it is deactivated by default in CSRankings:

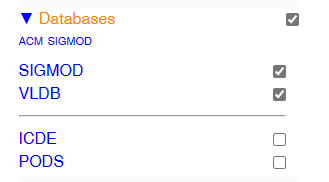

I also notice that most other top data mining conferences, such as ICDM and CIKM, are not included either. ICDE is another data-mining-related conference, with an acceptance rate of about 19%. It is listed, but it is also deactivated by default:

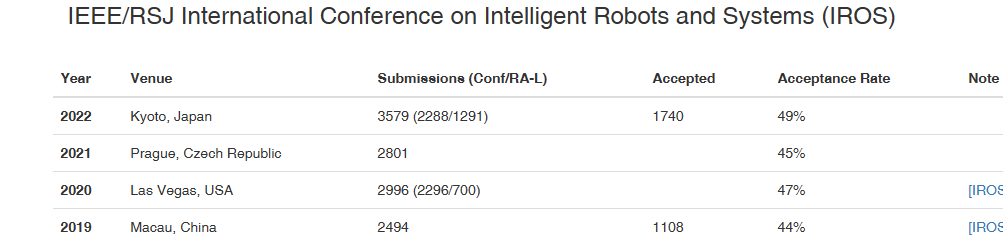

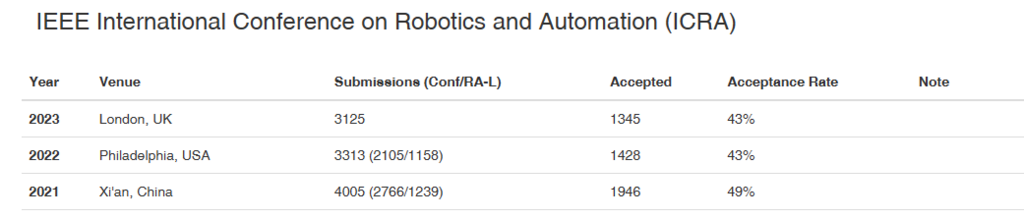

I find this quite surprising because, for other fields, some conferences that are arguably easier to publish in than ICDE and KDD are included in the ranking. For example, ICDE and KDD typically have acceptance rates in the range of 10-20%, while the robotics conferences IROS and ICRA are included in CSRankings despite much higher acceptance rates: around 49% for IROS and around 43% for ICRA (source: https://staff.aist.go.jp/k.koide/acceptance-rate.html ).

Thus, it seems to me that the ranking treats fields unequally: for some fields, the included conferences are much easier to publish in than those included for other fields. I think that this problem stems from the design decision of CSRankings to include only about three conferences per research area and to define the research areas based on ACM Special Interest Groups.

4) It is a conservative ranking that focuses on big conferences in popular research areas. It does not encourage researchers to publish in new conferences but rather to focus on well-established big conferences, as nothing else counts. It also does not encourage publishing in smaller conferences that might be more relevant. For example, during my PhD, I worked on intelligent tutoring systems, and the two top conferences in that field are Intelligent Tutoring Systems (ITS) and Artificial Intelligence in Education (AIED). These conferences are rather small and specialized, so they are totally ignored by CSRankings. But those are the conferences that matter in that field.

5) By design, the ranking focuses only on research. But other important aspects, such as teaching, may be relevant to some people. For example, an undergraduate student may care more about how likely they are to find a job after graduating. In that case, other rankings should be used.
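To make the inclusion rule from point 2 more concrete, here is a minimal Python sketch of how such a check could work. This is not the actual CSRankings code: the `Paper` record, the `meets_inclusion_rule` function, and the way the 10-year window is counted are all my own assumptions for illustration. Only the thresholds (50 R1 universities, 10 years) come from the rule as described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    """Hypothetical publication record (not the CSRankings data format)."""
    venue: str
    year: int
    institution: str


def meets_inclusion_rule(papers, venue, r1_institutions, current_year,
                         min_institutions=50, window_years=10):
    """Return True if enough distinct R1 institutions published at `venue`
    within the last `window_years` years (window boundaries are an assumption)."""
    first_year = current_year - window_years + 1
    publishing = {
        p.institution
        for p in papers
        if p.venue == venue
        and first_year <= p.year <= current_year
        and p.institution in r1_institutions
    }
    return len(publishing) >= min_institutions


if __name__ == "__main__":
    # Toy data with made-up venue and institution names.
    toy_papers = [
        Paper("ConfX", 2020, "Univ A"),
        Paper("ConfX", 2021, "Univ B"),
        Paper("ConfX", 2005, "Univ C"),        # outside the 10-year window
        Paper("ConfX", 2022, "Non-R1 Univ"),   # not an R1 institution
    ]
    r1 = {"Univ A", "Univ B", "Univ C"}
    # The threshold is lowered to 2 only so that the toy data is meaningful.
    print(meets_inclusion_rule(toy_papers, "ConfX", r1, 2024, min_institutions=2))
```

The point of the sketch is that the rule counts only US R1 institutions, so a conference whose community is mostly based outside the US can fail the test regardless of how selective it is.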

Conclusion

That was just a quick blog post to point out what I see as some shortcomings of CSRankings. Of course, no ranking is perfect, and CSRankings still provides useful information. But in my opinion, it has some limitations, and it seems to me that not all fields are treated equally in this ranking.

What do you think? Post your opinion in the comment section below.

—

Philippe Fournier-Viger is a full professor working in China and founder of the SPMF open source data mining software.
