Today, I will discuss how to become a good researcher and which skills matter most for a researcher. This blog post is aimed at young Master's and Ph.D. students, and offers them some practical advice.

1) Being humble and open to criticism

An important skill for a good researcher is to be humble and to be able to listen to others. Even when a researcher works very hard and thinks that his/her project is “perfect”, there are always some flaws or some possibilities for improvement.

A humble researcher listens to the feedback and opinions of other researchers, whether positive or negative, and thinks about how to use this feedback to improve the work. A researcher who works alone can do excellent work, but discussing research with others often brings new ideas. Also, presenting your work to others helps you understand how people will view it. For example, other people may misunderstand your work because something is unclear. In that case, you may need to adjust your research project.

2) Building a social network

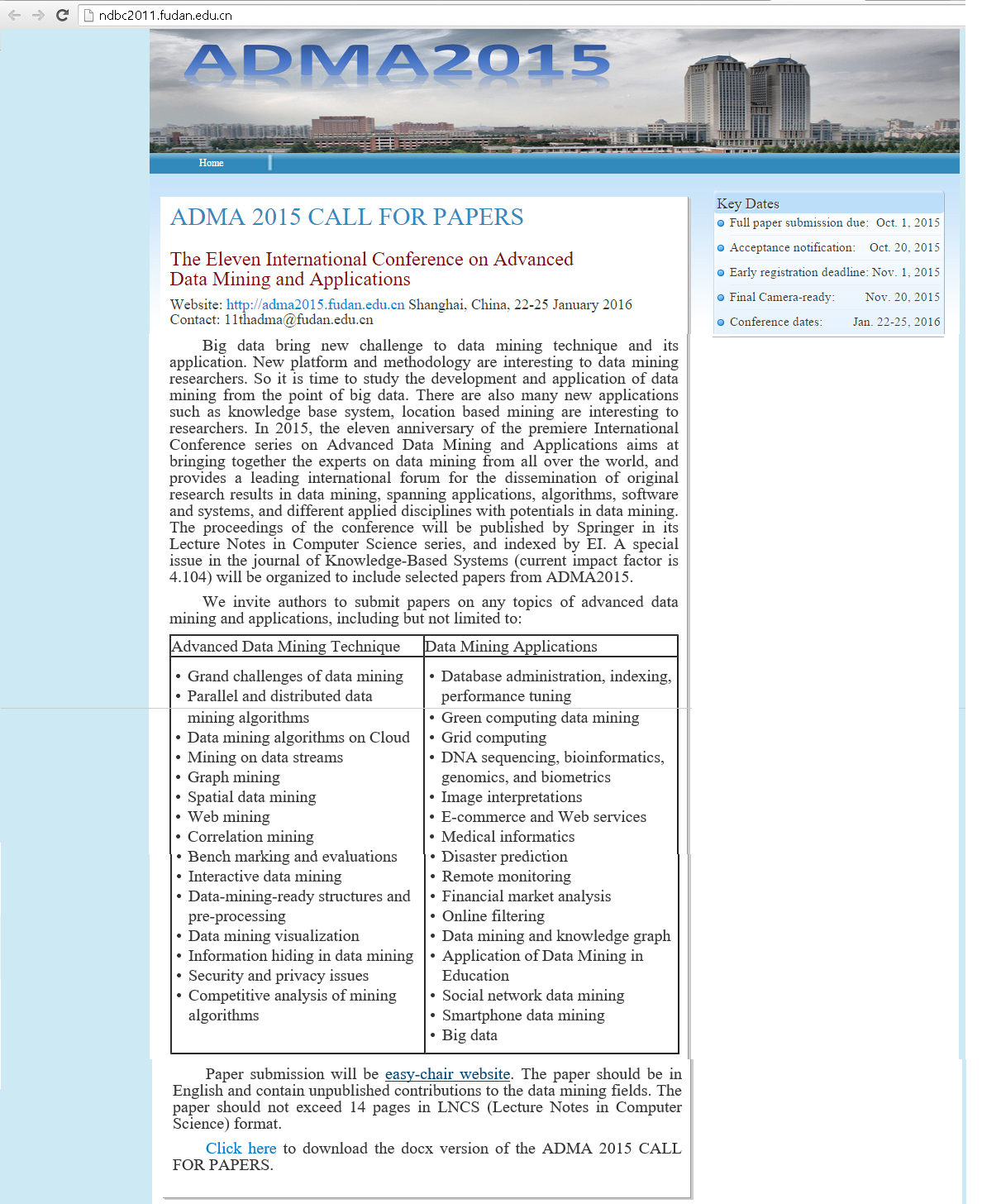

A second important thing for young researchers to work on is building a social network. A researcher who has the opportunity to attend international conferences should try to meet other students and professors to establish contact with them. Other ways of establishing contact are to send e-mails to ask questions or discuss research, or to attend seminars at other universities at the regional or national level.

Building a social network is very important as it can create many opportunities for collaboration. It can also help you obtain a Ph.D. position at another university (or abroad), a post-doctoral research position, or even a lecturer or professor position in the future. Other benefits include being invited to give a talk at another university or to join the program committee of conferences and workshops. A young researcher often has to work alone, but he/she should also try to connect with other researchers.

For example, during my Ph.D. in Canada, I established contact with some researchers in Taiwan, and I then applied to do my postdoc there. More recently, I used some other contacts to find a professor position in China, for which I applied and got the job. I have also collaborated many times with researchers whom I met at conferences.

3) Working hard, working smart

To become a good researcher, another important skill is to spend enough time on your project. In other words, a successful researcher works hard. For example, it is quite common for good researchers to work more than 10 hours a day. But of course, it is not just about working hard, but also about working “smart”: a researcher should spend his/her time on useful things that bring him/her closer to his/her goals. Thus, working hard should go together with good planning.

When I was an MSc and Ph.D. student, I could easily work more than 12 hours a day. Sometimes, I would only take a few days off during the whole year. Currently, I still work very hard every day, but I have to take a little bit more time off because I have a family. However, I have gained in efficiency. Thus, even though I work a bit less, I am much more productive than I was a few years ago.

4) Having clear goals / being organized / having a good research plan

A researcher should also have clear goals. For a Ph.D. or MSc student, this includes the general goal of completing the thesis, but also some subgoals or milestones on the way to that main goal. One should also try to set dates for achieving these goals. In particular, a student should plan his/her work around conference deadlines. It is not always easy to plan well, but it is a skill that one should try to develop. Finally, one should choose research topics carefully, to work on meaningful topics that will lead to a good research contribution.

5) Stepping out of the comfort zone

A young researcher should not be afraid to step out of his/her comfort zone. This includes trying to meet other researchers, trying to establish collaborations, trying to learn new ideas or explore new and difficult topics, and also studying abroad.

For example, after finishing my Ph.D. in Canada, which was mostly related to e-learning, I decided to work on the design of fundamental data mining algorithms for my post-doctoral studies, and to do this in a data mining lab in Taiwan. This was a major change in terms of both research area and country. It helped me build some new connections and work in a more popular research area, which gave me a better chance of obtaining a professor position afterwards. This was risky, but I successfully made the transition.

Then, after my postdoc, I got a professor job in Canada at a university far away from my hometown. This was a compromise that I had to make to get a professor position, since there are very few professor positions available in Canada (maybe only 5 that I could apply for every year).

Then, after working as a professor for 4 years in Canada, I decided to take another major step out of my comfort zone by selling my house and accepting a professor job at a top 9 university in China. This last move was very risky, as I quit my good job in Canada, where I was going to receive a permanent position. Moreover, I did that before I had actually signed the papers for my job in China. From a financial perspective, I also lost more than $20,000 by selling my house quickly to move out. However, the move to China has paid off: in the following months, I was selected by a national program for young talents in China. Thus, I now receive about 10 times the research funding that I had in Canada, and my salary is more than twice my salary as a professor in Canada, which covers all the money that I lost by selling my house. Besides, I have been promoted to full professor and will lead a research center. This is an example of how one can create opportunities in one's career by taking risks.

6) Having good writing skills

A young researcher should also try to improve his/her writing skills. This is very important for all researchers, because a researcher has to write many publications during his/her career. Every minute that one spends on improving writing skills will pay off sooner or later.

There are two types of writing skills.

- First, one should be good at writing in English without grammar and spelling errors.

- Second, one should be able to organize ideas clearly and write a well-organized paper (no matter whether it is written in English or another language).

For a student, it is important to work on improving these two skills during MSc and Ph.D. studies.

These skills are acquired by writing and reading papers, and by taking the time to improve yourself while writing (for example, by looking up the grammar rules when unsure about grammar).

Personally, I am not a native English speaker. I have thus worked hard during my graduate studies to improve my English writing skills.

Conclusion

In this brief blog post, I gave some general advice about important skills for becoming a successful researcher. If you think that I have forgotten something, please post it as a comment below.

==

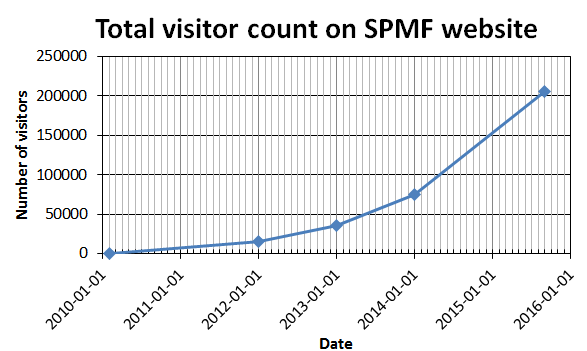

Philippe Fournier-Viger is a full professor and the founder of the open-source data mining software SPMF, offering more than 110 data mining algorithms. If you like this blog, you can tweet about it and/or follow my Twitter account @philfv to get notified about new posts.