Today was the 6th International Workshop on Utility-Driven Mining and Learning (UDML 2024), held at the PAKDD 2024 conference. The workshop was a success. There were many people in attendance (around 20), which is good considering that PAKDD is not a very large conference and that there were about 6 workshops and tutorials running at the same time.

Keynote speech by Prof. Jian Pei

A highlight of the UDML workshop was the invited talk by Prof. Jian Pei from Duke University. He is a famous researcher in data science who has made many very important contributions to the field. The talk was called “Data valuation in federated learning” and was very interesting. Prof. Pei first introduced federated learning, a popular topic, and explained that a key issue with many current models is that the monetary aspect is not taken into account. In fact, many researchers assume that different companies or organizations will want to share their data or collaborate to create models using federated learning, but do not consider that these actors need a reward to do so, which could for example be in monetary form.

To solve this problem, his team proposed models for federated learning that would ensure some form of fairness and other desirable properties. This is just a brief summary of the idea of this talk. Here are a few slides from the presentation:

Research papers

This year, the UDML workshop was competitive, with 23 submissions and 9 papers accepted (an acceptance rate of about 39%). The papers were on various topics including machine learning, but mainly focused on pattern mining (high utility pattern mining, sequential pattern mining, itemset mining, and some applications).

The proceedings of the workshop can be downloaded from this page. Here are a few pictures of some of the speakers:

Best paper award

In the opening ceremony of UDML, it was announced that the best paper award of UDML was given to the following paper, which proposed a novel algorithm for finding co-location patterns in spatial data:

A detection of multi-level co-location patterns based on column calculation and DBSCAN clustering

Ting Yang, Lizhen Wang, Lihua Zhou and Hongmei Chen

Congratulations to the winners!

Group photo

At the end of the workshop, a few photos were taken with some of the attendees.

Conclusion

That was a brief overview of the UDML 2024 workshop. In a follow-up blog post, I will try to tell you more about the other activities of the PAKDD 2024 conference. PAKDD is quite an interesting conference, especially for meeting researchers from the data mining and machine learning community of the Asia-Pacific area.

—

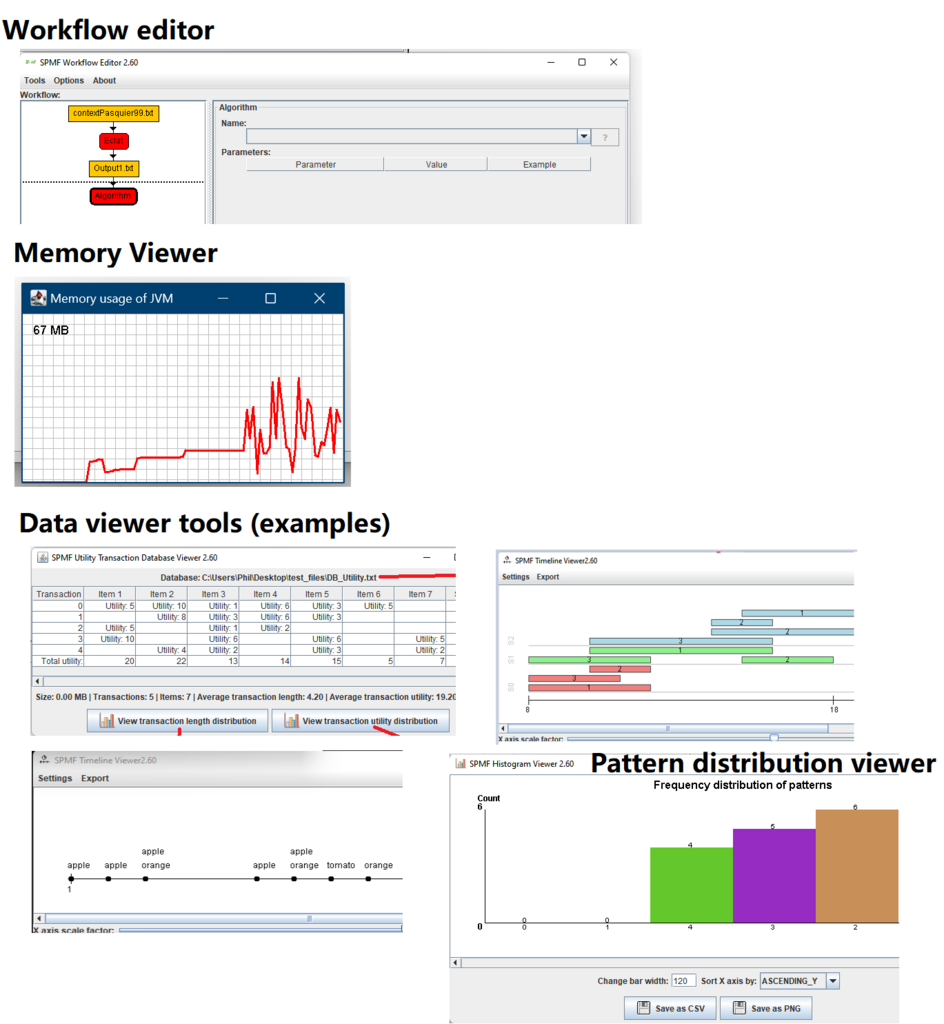

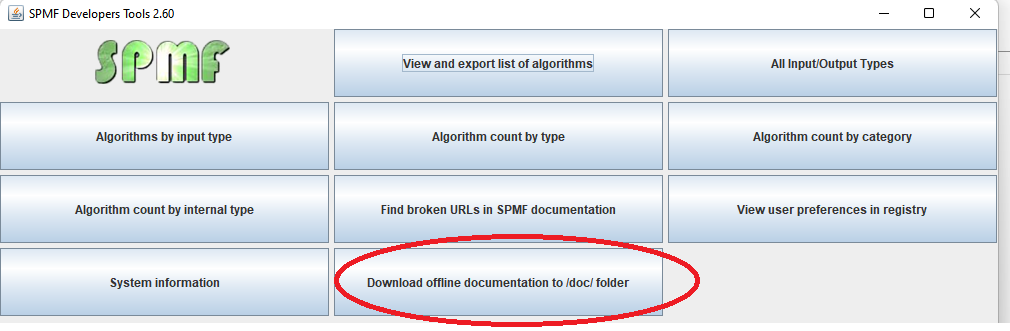

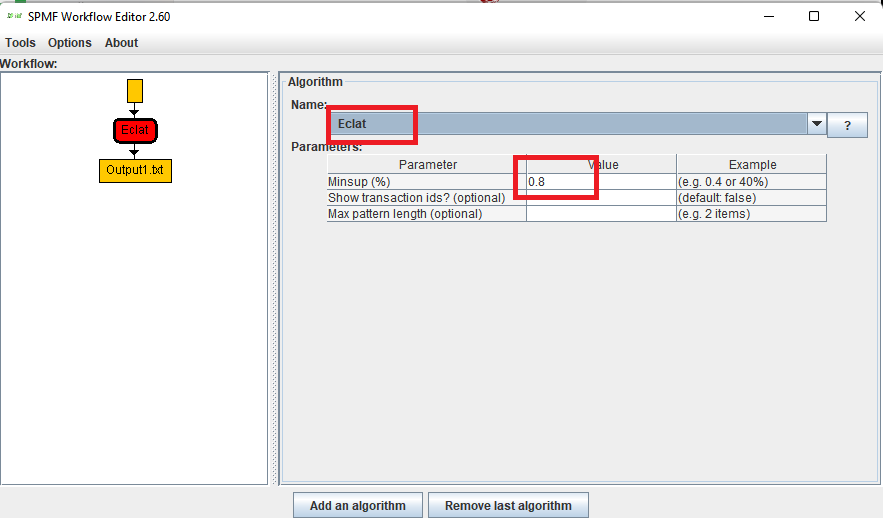

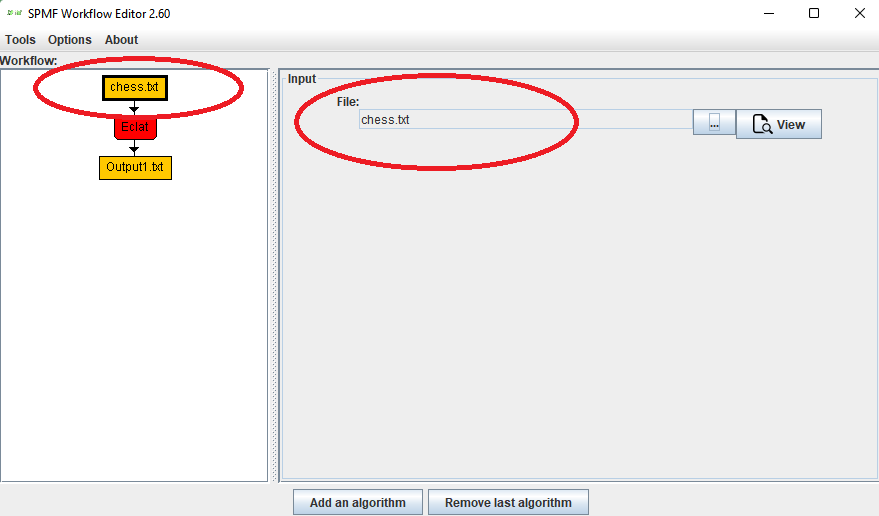

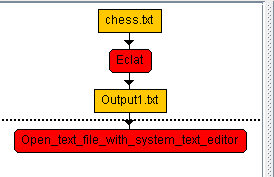

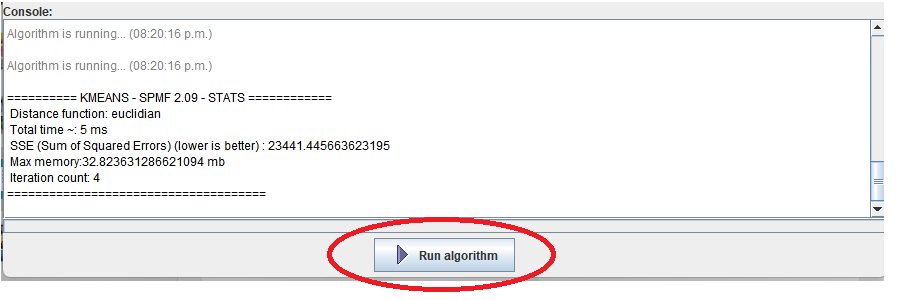

Philippe Fournier-Viger is a distinguished professor working in China and founder of the SPMF open source data mining software.