This week, I am attending the IEA AIE 2018 conference (the 31st International Conference on Industrial, Engineering & Other Applications of Applied Intelligent Systems) in Montreal, Canada.

About the conference

The IEA AIE conference is an international conference on artificial intelligence and related topics.

Conference opening

The conference opened on Tuesday morning.

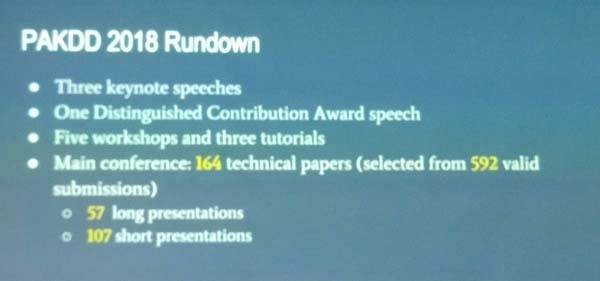

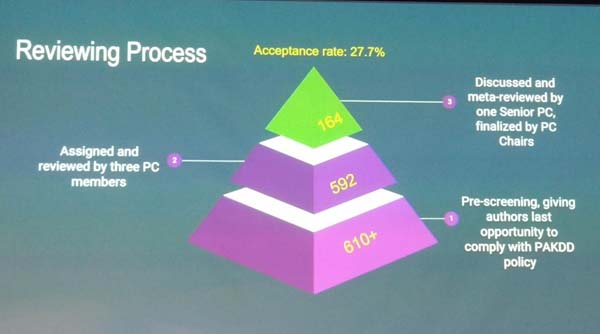

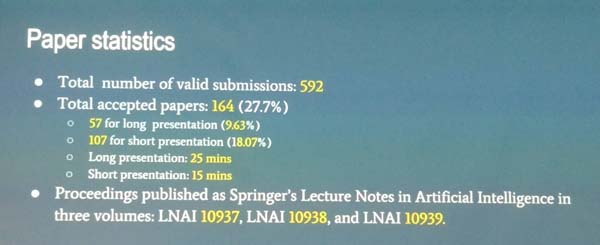

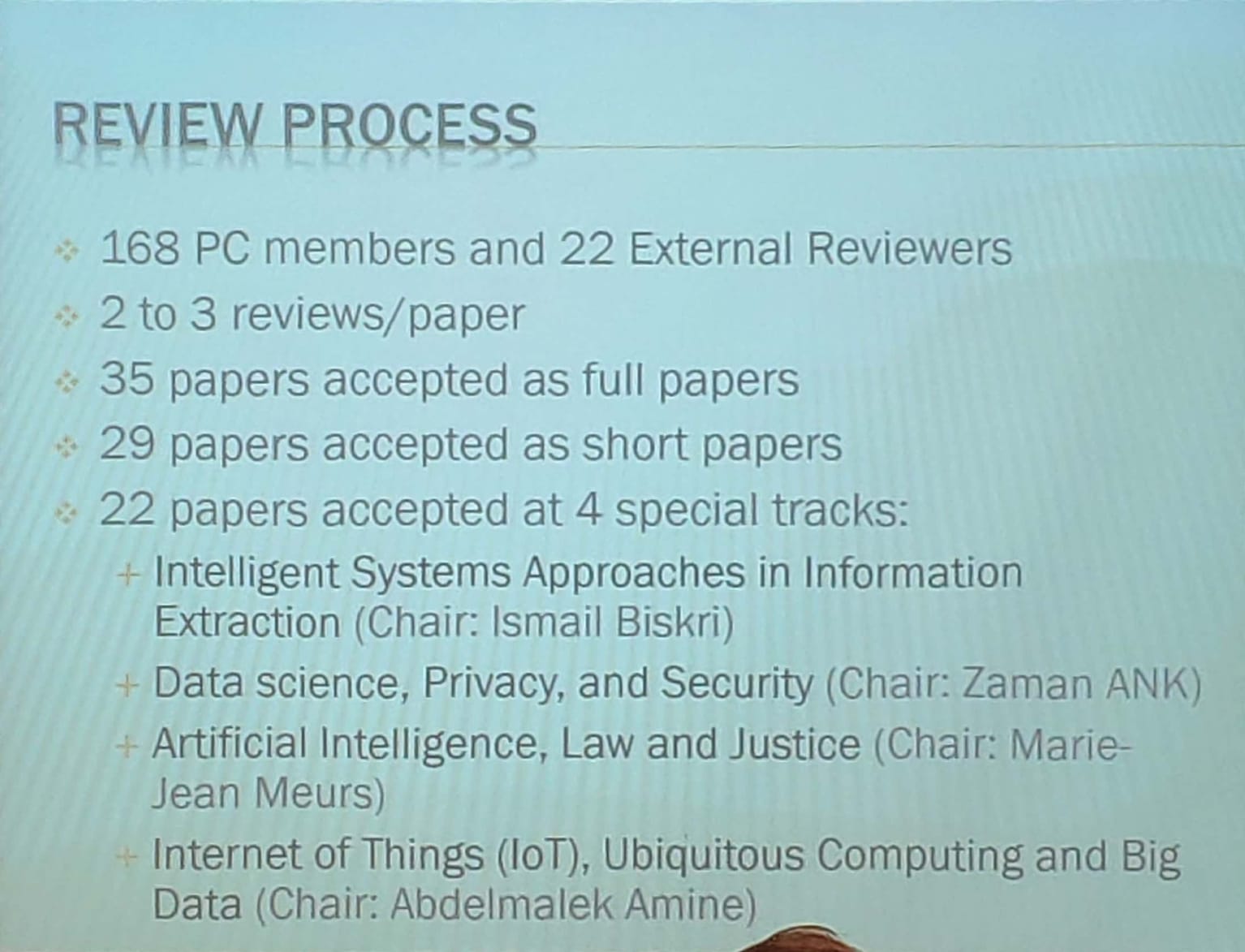

This year, 146 papers were submitted from 44 countries. Of those, 35 were accepted as long papers, 29 as short papers, and 22 in special tracks. Thus, the acceptance rate for long papers is about 24%, while the overall acceptance rate is around 59% (86 of 146 papers). More details about the review process:

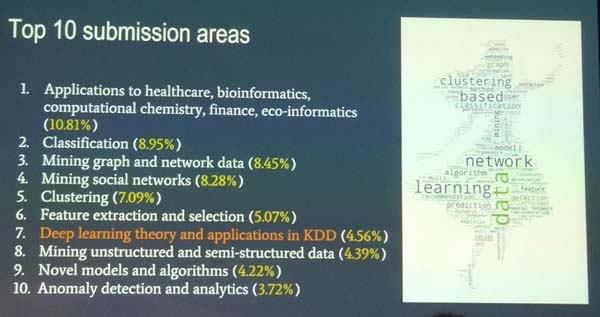

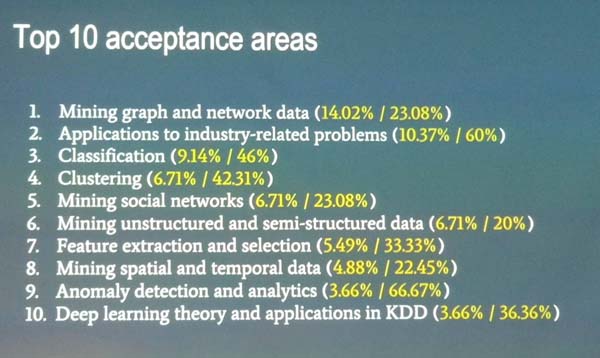

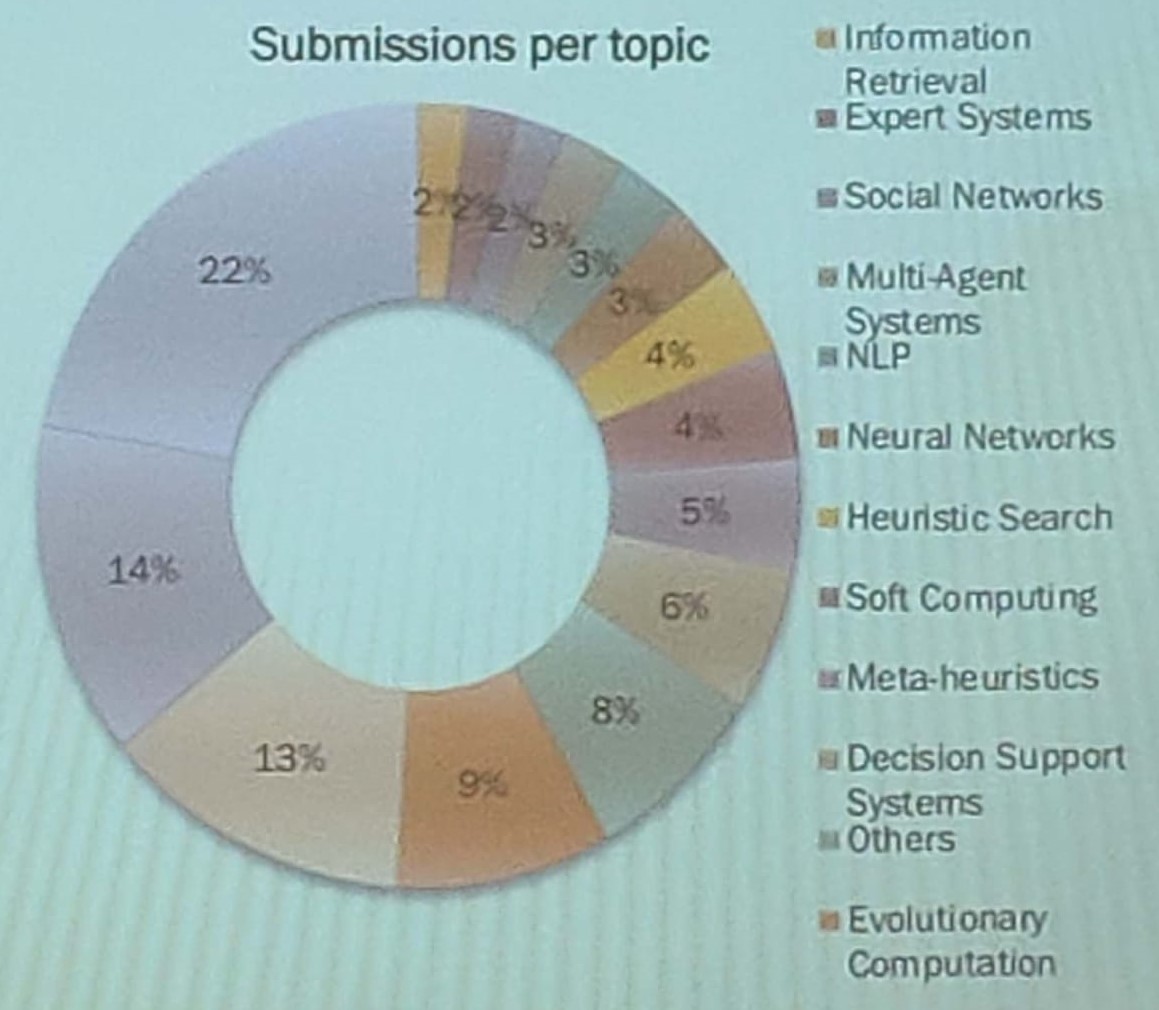

Here is a breakdown of topics of papers submitted to the conference (from the conference opening):

It was also announced that next year, IEA AIE 2019 will be held in Graz, Austria (http://ieaaie2019.ist.tugraz.at/). Then, IEA AIE 2020 will be held in Japan.

I estimate that about 80 people attended the conference.

Conference location

The conference was held at Concordia University in Montreal, Canada, which is located in the pleasant downtown area of the city. The talks were held in meeting rooms and classrooms of the university.

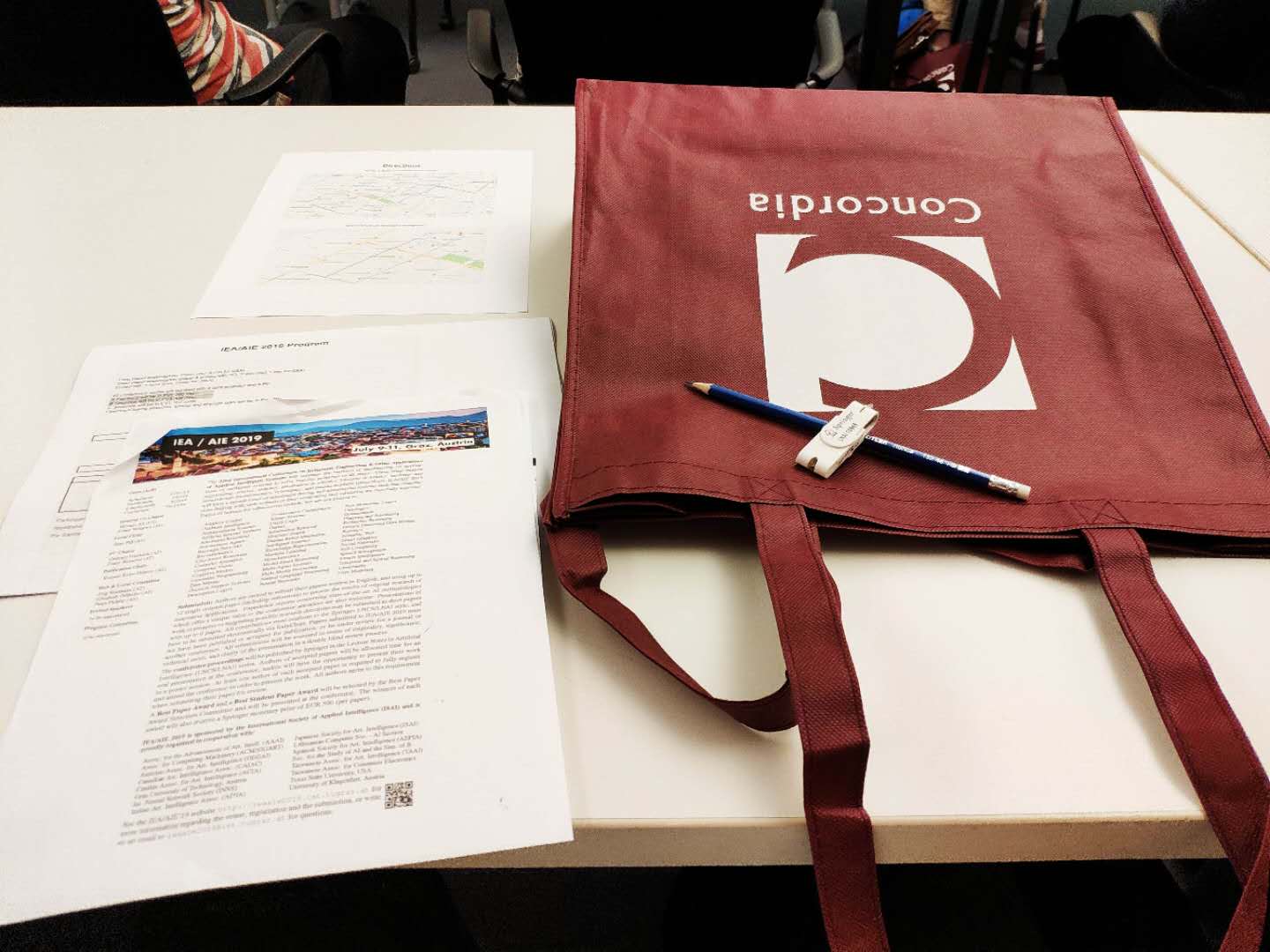

Conference materials

After registering, I received a conference bag with a few papers, a pen, a notepad and the proceedings on a USB stick.

At the end of the conference, free copies of the proceedings were offered on a first-come, first-served basis.

Keynote talk by Guy Lapalme on Question Answering Systems

The first keynote talk was given by Guy Lapalme from the University of Montreal and was titled “Question-Answering Systems: challenges and perspectives”. It gave an overview of research on question-answering systems. Below, I briefly describe this talk.

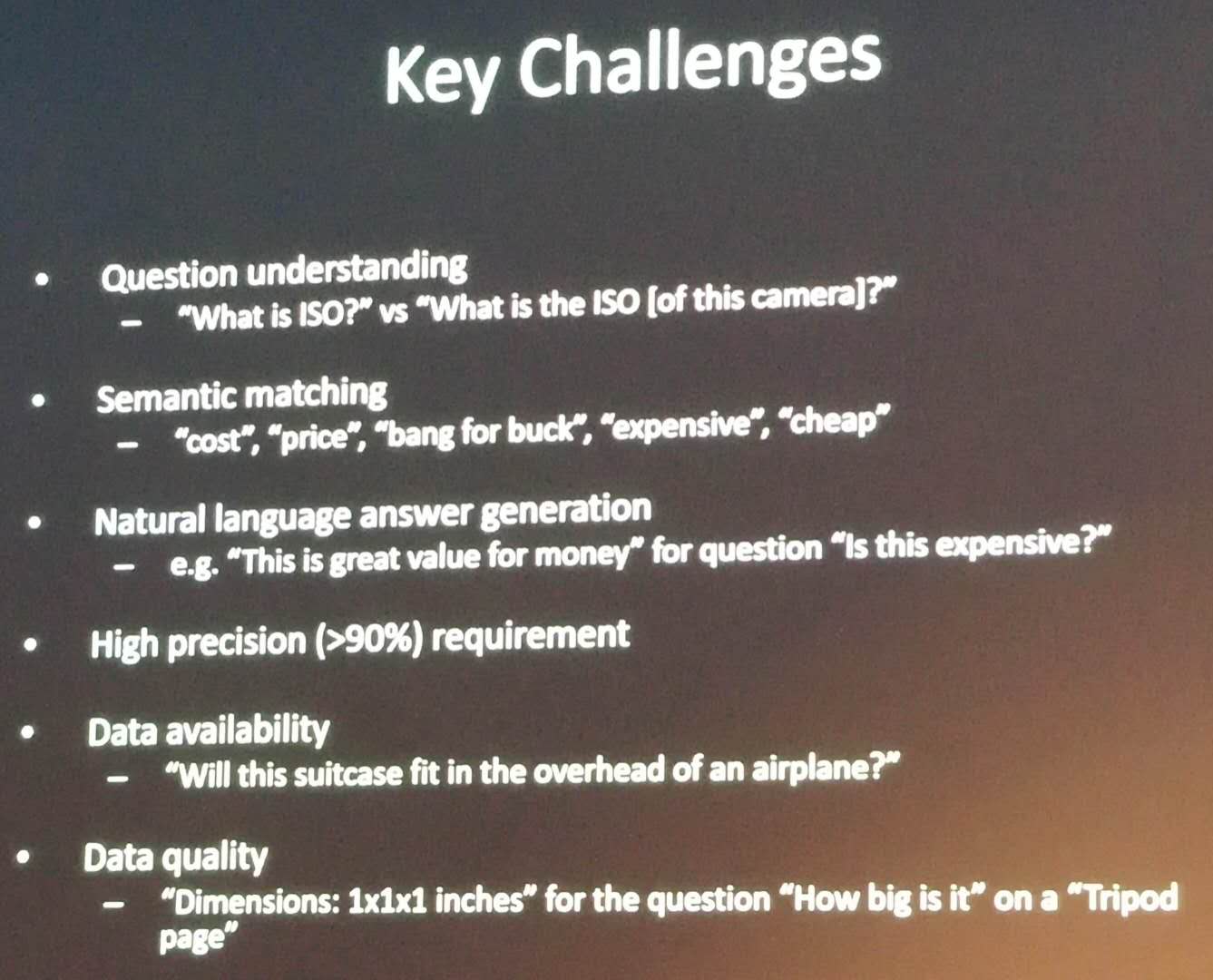

The speaker explained what question answering is by comparing it with information retrieval. Information retrieval is about finding documents, whereas question answering is about finding answers to questions. According to Maybury (2004), a question-answering system is an interactive system that understands the user's needs expressed in natural language, searches for relevant data, knowledge and documents, extracts and prioritizes answers, and shows and explains these answers to the user.

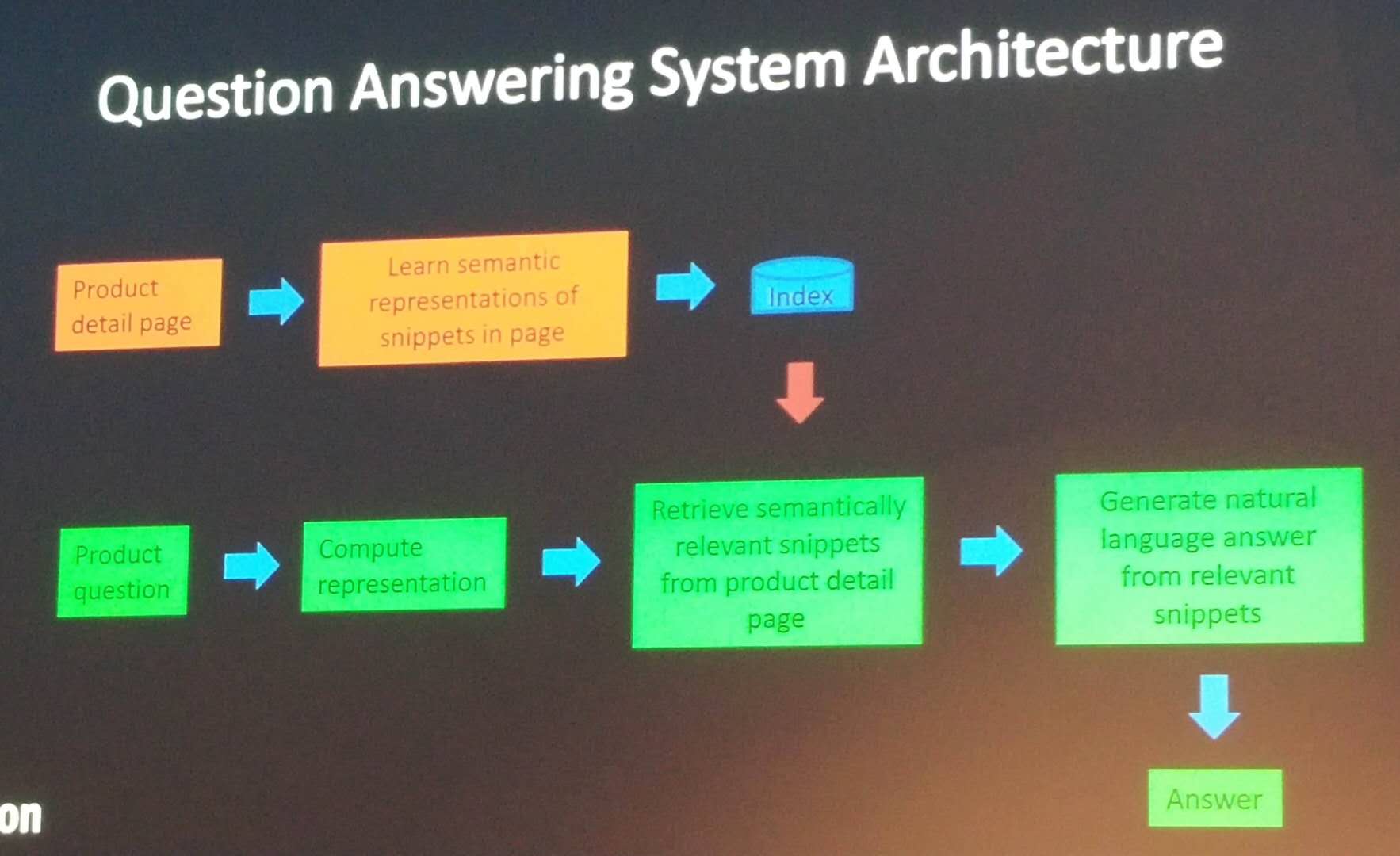

Building a question-answering system requires considering several aspects related to natural language processing (question and document analysis, information extraction, natural language generation and discourse analysis), information retrieval (query building, document parsing and relevance feedback) and human-computer interaction (user preferences and interaction).

Many question-answering systems are built on some simplifying assumptions: that the user prefers an answer rather than a document, that it is not necessary to look at the context, that the questions are closed (not questions such as “What is the purpose of life?”), and that answers are given as noun phrases or numbers rather than as a procedure.

Question answering has been studied since the 1970s. Researchers found that obtaining a general understanding of a question and its answers is a very difficult problem. Thus, in the nineties, research focused on answering simple factual questions such as “Where is the Taj Mahal?” or “Name a film in which actor A acted”. Nowadays, such questions are easily answered by Web search engines. Researchers have since studied how to answer more complex questions.

There are different types of question-answering systems. The simplest ones try to find verbatim answers directly in documents by keyword matching and perform simple reasoning. More advanced systems can perform analogical, spatial and temporal reasoning to answer more complicated questions such as “Is Canada still in recession?”. Some systems are also interactive (they can remember previous questions and answers).

A simple question-answering system has three main components, which respectively (1) analyze questions to find the type of expected answer (who, what, where, how?), (2) analyze documents to find relevant sentences, and (3) extract answers and evaluate them (correct vs. incorrect vs. correct but not supported by a document).

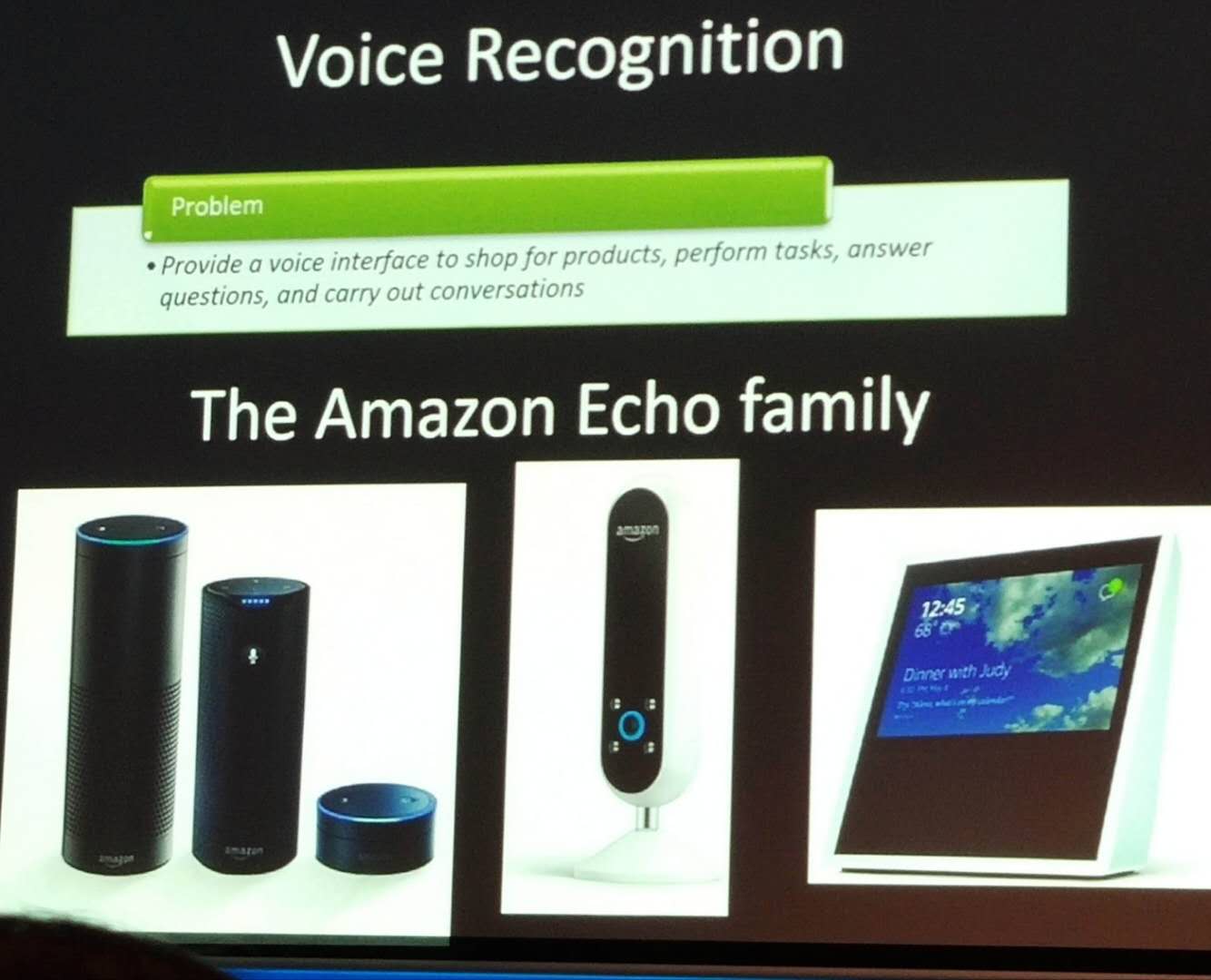
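To make this three-component architecture more concrete, here is a minimal Python sketch of the simplest kind of system mentioned in the talk, one that relies on keyword matching. This is my own illustration, not code from the speaker: the function names (`classify_question`, `rank_sentences`, `extract_answer`, `answer`), the table of question cues, and the stop-word list are all hypothetical, and the answer extraction is a deliberately naive heuristic.

```python
import re

# Hypothetical table mapping a question cue to the expected answer type.
ANSWER_TYPES = {
    "who": "PERSON",
    "where": "LOCATION",
    "when": "DATE",
    "how many": "NUMBER",
    "what": "THING",
}

# A tiny illustrative stop-word list; real systems use far larger ones.
STOP_WORDS = {"the", "a", "an", "is", "was", "of", "in", "who", "where",
              "when", "what", "how", "many", "did", "does", "do"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def classify_question(question):
    """Component 1: guess the expected answer type from the question cue."""
    q = question.lower()
    # Try longer cues first so that "how many" is matched before shorter ones.
    for cue in sorted(ANSWER_TYPES, key=len, reverse=True):
        if q.startswith(cue):
            return ANSWER_TYPES[cue]
    return "UNKNOWN"


def rank_sentences(question, documents):
    """Component 2: split documents into sentences, rank them by keyword overlap."""
    keywords = set(tokenize(question)) - STOP_WORDS
    scored = []
    for doc in documents:
        for sentence in re.split(r"(?<=[.!?])\s+", doc):
            overlap = len(keywords & set(tokenize(sentence)))
            if overlap > 0:
                scored.append((overlap, sentence))
    # Highest overlap first; the sort is stable, so ties keep document order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [sentence for _, sentence in scored]


def extract_answer(question, sentence, answer_type):
    """Component 3: naively pick a word that is not in the question and fits the type."""
    question_words = set(tokenize(question))
    candidates = [w for w in re.findall(r"[A-Za-z0-9]+", sentence)
                  if w.lower() not in question_words and w.lower() not in STOP_WORDS]
    if answer_type == "NUMBER":
        candidates = [w for w in candidates if w.isdigit()]
    elif answer_type in ("PERSON", "LOCATION"):
        # Crude proper-noun test: the word starts with a capital letter.
        candidates = [w for w in candidates if w[0].isupper()]
    return candidates[0] if candidates else None


def answer(question, documents):
    """Chain the three components; return (answer, supporting sentence) or (None, None)."""
    answer_type = classify_question(question)
    for sentence in rank_sentences(question, documents):
        candidate = extract_answer(question, sentence, answer_type)
        if candidate:
            # Returning the sentence shows which document text backs the answer.
            return candidate, sentence
    return None, None
```

For example, with `docs = ["The Taj Mahal is located in Agra. It was built by Shah Jahan.", "This year, 146 papers were submitted from 44 countries."]`, `answer("Where is the Taj Mahal?", docs)` returns `("Agra", "The Taj Mahal is located in Agra.")`, and `answer("How many papers were submitted?", docs)[0]` returns `"146"`. However, `answer("Who built the Taj Mahal?", docs)` also returns `"Agra"`, because the sentence sharing the most keywords with the question is tried first. This is a small illustration of why keyword matching alone is not enough.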

Commercial systems like Alexa, Google Assistant and Cortana can usually answer simple questions that are task oriented, and can sometimes maintain a conversation context.

The speaker then gave further explanations. He concluded that question answering is hard and far from being a solved problem. Moreover, even though many question-answering systems are restricted to specific tasks, they can already provide useful services.

Conference reception

One of the highlights of this conference was that the reception was held at the Musée Grévin, a wax museum. This was quite special and was also a good opportunity to talk with other researchers.

Keynote talk by Behrouz H. Far on Autonomous and Connected Vehicles

The second keynote talk was given by Behrouz H. Far from the University of Calgary. It gave an overview of technologies for autonomous and connected vehicles and of prospects in that field.

The main motivations for developing autonomous vehicles are to reduce pollution, improve safety, and increase transportation efficiency. The introduction of connected and autonomous vehicles can bring benefits but can also have negative impacts, such as disruption and the loss of jobs (e.g., for truck drivers) and resources.

Some terms used to describe autonomous vehicles are “Autonomous Ground Vehicles (AGVs)”, “Unmanned Ground Vehicles (UGVs)” (autonomous, self-driving or driverless vehicles), and “Intelligent Transportation Systems (ITSs)”. Some studies have suggested that autonomous vehicles could reduce road fatalities by 1,600 per year in Canada and bring billions of dollars in economic benefits. Manufacturers have said that UGVs would be available between 2020 and 2025.

Developing autonomous vehicles requires many advanced technologies, including sensor technology, navigation technology, communication technology, algorithms (control, guidance), data technology, software technology (artificial intelligence, machine learning, personalized guidance), and computing infrastructure (e.g., the cloud). Each of them is described in more detail below.

1) Sensor technology: many types of sensors are available, including GPS, cameras, odometers, laser scanners, LiDAR and radar. A modern automobile may have more than 1,500 sensors.

2) Navigation technology: There are both visual and non-visual navigation systems. Visual systems use techniques such as pattern recognition, and feature tracking and matching.

3) Communication technology: Initially, a major problem was communication range. Nowadays, several standards have been proposed for long-range communication, including cellular technology.

4) Algorithms: This includes algorithms for localization, guidance and control, cooperative multi-sensor localization, advanced driver assistance systems, etc.

5) Data technology: Large amounts of structured and unstructured data must be handled. Performance, availability, data security, resiliency, management and monitoring are important.

6) Software technology: This includes artificial intelligence and machine learning techniques, multi-agent systems, and personalized intelligent transportation solutions (monitoring and warning systems). It is also desirable to combine sensory information with real-time data, navigation technology and artificial intelligence.

7) Computing infrastructure: for example, cloud computing.

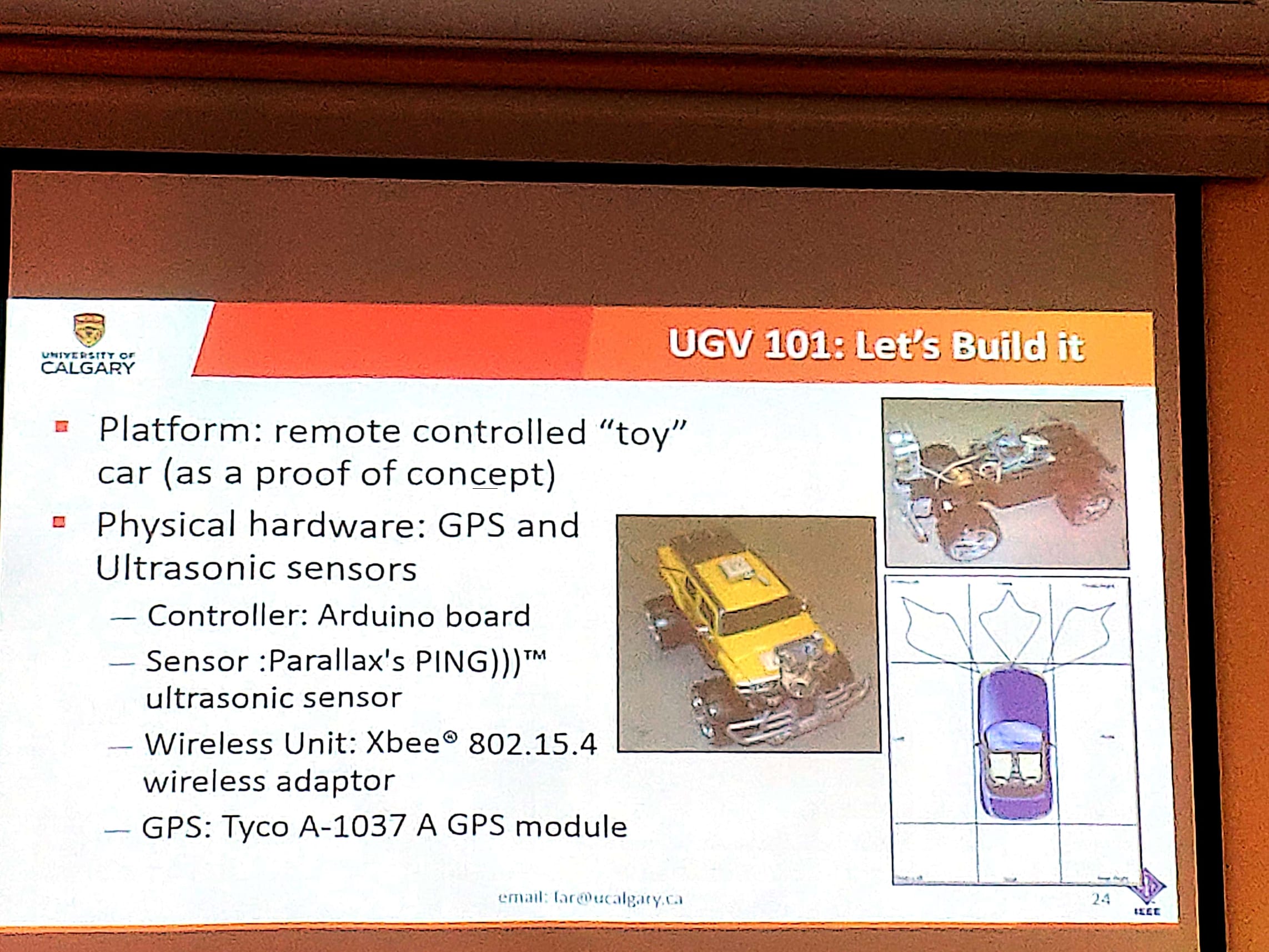
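The talk stayed at the overview level, so the following is my own illustration rather than anything the speaker presented. It is a minimal Python sketch of one textbook way to combine readings from several sensors for localization: inverse-variance weighting of independent one-dimensional position estimates, which is equivalent to the measurement-update step of a one-dimensional Kalman filter. The `Estimate` class, the `fuse` function, and the GPS and odometry numbers are hypothetical, and real vehicles fuse multi-dimensional states with far more elaborate filters.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A hypothetical 1-D position estimate (metres) with its variance (square metres)."""
    position: float
    variance: float


def fuse(estimates):
    """Fuse independent Gaussian estimates by inverse-variance weighting.

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    The fused variance is 1 / sum(1/variance), which is never larger than the
    smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.variance <= 0 for e in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    position = sum(w * e.position for w, e in zip(weights, estimates)) / total
    return Estimate(position=position, variance=1.0 / total)


if __name__ == "__main__":
    gps = Estimate(position=100.0, variance=4.0)       # noisier sensor
    odometry = Estimate(position=104.0, variance=1.0)  # more precise sensor
    print(fuse([gps, odometry]))
```

With these numbers, the fused position is 103.2 m with variance 0.8: the result leans toward the more precise sensor and is more certain than either input on its own.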

The speaker then explained that cheap unmanned ground vehicles can be designed with technology that is available today, and he showed some simple examples.

He then discussed more technical details and more complex prototypes built in his lab.

Conference banquet

The conference banquet was held at an archaeology museum called “Musée Pointe-à-Callière”. The dinner was fine.

Conclusion

That is all I wanted to write about the conference. This was not the first time I attended the IEA AIE conference: I also attended it in 2009 and 2016. If you are curious, here is my report about IEA AIE 2016 in Japan.

---

Philippe Fournier-Viger is a professor of Computer Science and also the founder of the open-source data mining software SPMF, offering more than 145 data mining algorithms.