Recently, I have attended the PAKDD 2024 conference, where I was happy to receive the most influential paper award with my co-authors. This award is a test of time type of award that is given to the paper from PAKDD 2014 that received the largest number of citations or had the largest impact over the last ten years. In this blog post, I will briefly talk about the story of this paper, and why it has been successful. Then, I will talk about some of the applications of the algorithms presented in this paper, and talk about how to get such award.

The paper

I have received the award with my co-authors for this paper:

Fournier-Viger, P., Gomariz, A., Campos, M., Thomas, R. (2014). Fast Vertical Mining of Sequential Patterns Using Co-occurrence Information. Proc. 18th Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD 2014) Part 1, Springer, LNAI, 8443. pp. 40-52. [ppt][source code]

This paper is about sequential pattern mining (SPM). Let me explain quickly what this is. SPM is a popular data mining task that is used to analyze sequence data. So what is a sequence? A sequence is an ordered list of symbols. For example, let me show you a few examples of sequences that we may find in some real-life applications.

In this first example, we have a sequence of customer purchases indicating that a customer has bought an apple, then some bread, and then some cake. But sequences can be found also in many other domains. Another example is a sequence of words in a text:

In that sequence the words are sequentially ordered. Another example of sequence from another domain is a sequence of locations visited by a person driving a car in a city:

If we have data represented as sequences, we can apply the task of sequential pattern mining to find patterns (subsequences) that appear frequently in those sequences. The idea is to discover patterns that could reveal something about those sequences. For example, we may want to analyze the sequences of purchases made by several customers to find some sequences of purchases common to multiple customers. Let me show you how sequential pattern mining works with a simple example. Consider a database of four sequences representing the purchases made by four different customers:

In that example, the letter a, b, c, and d represent the purchase of apple, bread, cake, and dattes, respectively. The first sequence called S_1 indicates that the first customer has bought apple and bread at the same time, followed by buying cake, and then by purchasing apple. The other three sequences (S_2, S_3 and S_4) have a similar meaning.

Now, if we want to do sequential pattern mining with this data, we must set a parameter called the minimum support threshold (abbreviated as minsup). Consider that we decide to set this parameter as minsup = 3. It means that we want to find all the sequential patterns (subsequences) that appear in at least 2 sequences of the inpput sequence database.

Let me show you what is the output for minsup = 3:

For the input sequence database on the left and minsup =3, the output is the list of sequential patterns that are presented on the right side of the above figure. Take the pattern <{a,b},{c}> as an example. This pattern is said to have a support of 3 (to appear three times) because it occurs in three sequences in the input database, as highlighted in yellow below:

As it can be seen in the above figure, apple and bread appear together and are followed by cake in the sequence S_1, S_2 and S_3. Hence, this pattern <{a,b},{c}> is said to have a support of 3, and since this value is no less than minsup, this pattern <{a,b},{c}> is also said to be a frequent sequential pattern and it is output.

It can be observed in the sequence S_2 that there can be a gap between {a,b} and {c}, and this is ok, since {a,b} still appears before {c}.

So to summarize, the task of sequential pattern mining is to find all the frequent sequential patterns in a database, given some minsup threshold set by the user. And a frequent sequential pattern is a subsequence that appears in at least minsup sequences.

In the above example, with minsup = 3, the output is 6 sequential patterns. For a task of sequential pattern mining such as in the above example, there is always only one solution, which is the set of patterns to be discovered. The challenge is in designing efficient algorithms to find this solution.

So what was the paper about? In that paper, we presented a new optimization called co-occurrence pruning that allows to considerably speed-up sequential pattern mining algorithms. The improvement obtained by our optimization can be for example to speed up an algorithm by up to 10 times. The improved algorithms in the paper are called CM-SPAM, CM-SPADE and CM-CLASP, which are improved versions of the classical SPAM, SPADE and CLASP algorithms.

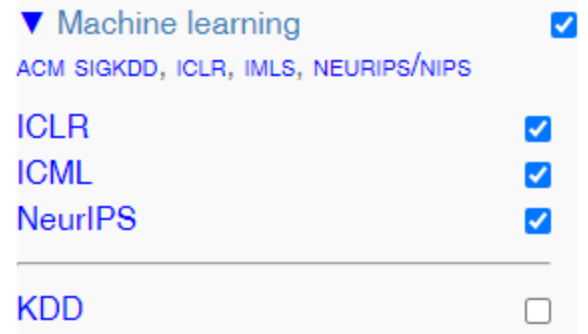

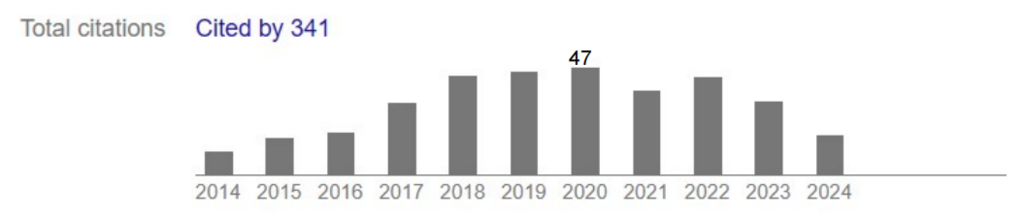

The paper has won the most influential paper award by having over 340 citations, according to Google Scholar, as shown below:

These citations are mainly of two types: (1) applications of the improved algorithms (CM-SPAM, CM-SPADE and CM-CLASP) in some real-life applications and (2) papers that have used the proposed optimization to develop other similar algorithms.

The story of this paper

So now, let me explain the story behind this paper by going back to 2013-2014. The paper was written by me and three co-authors, shown below:

At that time, I was a young professor working on pattern mining, and Antonio and Rincy were students, while Manuel was the supervisor of Antonio. But initially, we did not know each other.

From my side, I had started to develop the SPMF open-source pattern mining software in 2008, which is a free software in Java offering efficient implementations of many pattern mining algorithms. Then, around 2013, I received several e-mails from Rincy to discuss sequential pattern mining algorithms:

In particular, Rincy wanted to know which sequential pattern mining algorithm is the best. He really wanted to find the answer and did several experiments about this with SPMF, which were very interesting. But at that time, we did not have the source code of all the best algorithms to make an exhaustive comparison.

Then, I started to discuss by e-mail with Antonio from Spain, who had just published a paper at PAKDD 2023 about the CLASP algorithm for closed sequential pattern mining. Antonio accepted to share with me the code of many additional algorithms including GSP, SPADE, SPAM, PrefixSpan, CloSpan and CLASP.

So now, we had many algorithm implementations for comparing sequential pattern mining algorithms. I then continued discussing with Rincy through e-mails:

As I recall, he made an important observation, which is that vertical algorithms such as SPAM generate too many candidates, and the main cost of such algorithm is the join operation that is performed to calculate the support of each candidate. Thus, if we could find a way to reduce that number, we might be able to speed-up the algorithms…

Then, after that, based on that observation, I designed the new co-occurrence pruning optimization that is presented in the paper. The optimization was implemented by me and Antonio in several algorithms, and we have all participated together to the paper writing, and Manuel also helped for the paper. Then, we submitted it to PAKDD 2014…. and it got accepted!

At that time, as I remember, the paper was accepted but maybe had two accept and a weak accept recommendations. Thus, it was not regarded as the best paper of PAKDD 2014, but 10 years later, it is arguably the paper that had the biggest impact. From this, we may draw the conclusion that reviewers are not always right. 🙂

Why it was successful?

I believe that the main reasons why the paper was successful are the following:

- – The paper is about a fundamental topic (sequential pattern mining) that can have applications in many fields.

- – We have shown a clear performance improvement over the state-of-the-art algorithms and compared with many algorithms on several datasets.

- – I promoted the paper by talking about it to other researchers in numerous occasions, by having a website, a blog, and also mentioning this paper in several of my own papers, including a survey on sequential pattern mining.

- – I published the source code of the algorithms and datasets in the SPMF pattern mining software. Thus, it is easy for anyone to reuse my code, apply it to other domains or extend it.

Applications

The algorithms from that paper and from the SPMF pattern mining software in general, have been used in a wide range of applications from multiple fields. Some are listed in the picture below:

In particular, two representative applications of the sequential pattern mining algorithm CM-SPAM are presented in those two papers written by my team:

Nawaz, M. S., Fournier-Viger, P., Nawaz, M. Z., Chen, G., Wu, Y. (2022) MalSPM: Metamorphic Malware Behavior Analysis and Classification using Sequential Pattern Mining. Computers & Security, Elsever, to appearDOI: 10.1016/j.cose.2022.102741

Nawaz, S. M., Fournier-Viger, P., He, Y., Zhang, Q. (2023). PSAC-PDB: Analysis and Classification of Protein Structures. Computers in Biology and Medicine, Elsevier, 158: 106814 (2023)

DOI: 10.1016/j.compbiomed.2023.106814

In the first paper above, we have applied sequential pattern mining to analyze the behavior of malware programs such as computer viruses, worms and trojans. In this case, the data are sequences of API calls made by programs, and we extract sequential patterns to detect (classify) different types of virus. More precisely, the sequential patterns were used as features to train different classifiers, and excellent performance was achieved over the state-of-the art approaches.

In the second paper above, a similar sequential pattern mining-based methodology is used but for analyzing biological viruses. The sequences are in this case genome sequences. Excellent results are also obtained.

Those two papers are examples, but in fact, sequential pattern mining can be applied in numerous other domains.

How to get such award?

So before concluding, how to get this award? I provide a summary in the picture below:

Conclusion

I hope that you have enjoyed this blog post! If you have any comments, you may write in the comment section below.

—

Philippe Fournier-Viger is a distinguished professor working in China and founder of the SPMF open source data mining software.