In this blog post, I will talk about the recent trends of journal editors rejecting papers because of their similarity with other papers as detected by the CrossCheck system. I will explain how this system works and talk about its impact, benefits and drawbacks.

What is similarity checking?

Nowadays, when an author submit a paper to a well-known academic journal (from publishers such as Springer, Elsevier and IEEE), the editor will first submit the paper to an online system to check if the paper contain plagiarism. That system will compare the paper with papers from a database created by various publishers and websites to check if the paper is similar to some existing documents. Then, a report is provided to the journal editor indicating if there is some similarity with existing documents. In the case where the similarity is high or some key parts have clearly plagiarised from other authors, the editor will typically reject the paper. Otherwise, the editor will submit the paper to reviewers and then start the normal review process.

Why checking the similarity with other papers?

There are two reasons why editors perform this similarity check:

- to quickly detect plagiarized papers that should clearly not be published.

- to check if a paper from an author is original (i.e. if it is not too similar to previous papers from the same author).

In the second case, some journal editors will say for example that the “similarity score” should be below 20% or 40%, depending on the journal. Thus, under this model, an author is allowed to reuse just little bit of text in his own papers.

How does it works?

Now you perhaps wonder how that similarity score is calculated. Having access to some similarity report generated by the CrossCheck system, I will provide information about what these reports looks like and then explain some key aspects of this system.

After the editor submit a paper to CrossCheck, he receives a report. This report contains a summary page that looks like this:

Part of a CrossCheck similarity report

This report gives an overall similarity score of 32%. It can be interpreted as that on overall 32 % of the content of the text matches with existing documents. It is furthermore said that 4 % is a match with internet sources, 31% with some other publications and 2% with student papers. And as it can be observed, 31% + 2% + 4 % does not add up to 32%. Why? Actually, the calculation of the similarity score is misleading. I do not have access to how the score is calculated but I found some explanation online. It is that the similarity score is computed by matching each part of a text with at most one document. In other words, if some paragraph of a submitted paper match with two existing documents, this paragraph will be counted only once in the overall score of 32 %.

In the above picture, it is also observed that there is a 23 % match with a another document (called #1), a 1% match with a document (#2), less than 1% match with a document (#3), and so on until some document (#9).

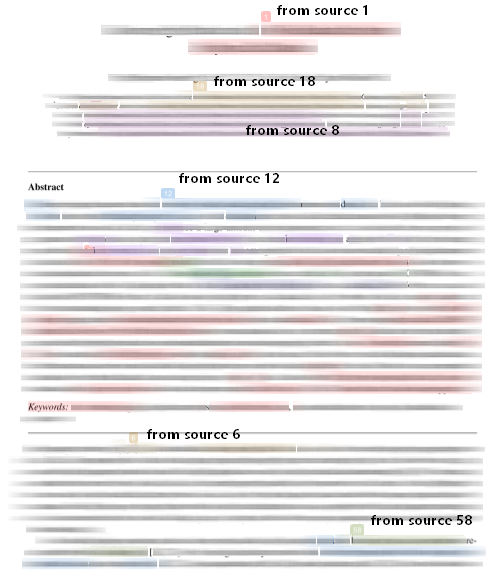

An annotated pdf is also provided to the editor, which highlights the parts that are matching with the documents. For example, I show some page of such report below, where I have blurred the text for anonymization:

Detailed similarity comparison (blurred)

In such report, matching parts are highlighted in different colors and some number indicates which documents has been matched to which part of the text. For example, the title of the paper is said to partly match with document #1, and the abstract is said to partly match with document #18 and #8.

Limitations of this similarity checking systems

I will now describe some problems that I have observed about reports generated by this similarity checking system:

- In the above report, the countries of authors and their affiliations is considered as matching with their previous documents, which increases the similarity score. But obviously, this should not be taken into account. Can we blame an author for using the same affiliations in two papers or for having the same affiliation as another author?

- Keywords are also considered as matching with previous documents. But I don’t think that using the same keywords as another paper should be an issue.

- Some of the matches are some very generic expressions or sentences that are used in many papers such as “explained in the next section” or “this paper is organized as follows”.

- Another limitation is that this similarity check completely ignores all the figures or illustrations. Thus if an author extends a conference paper as a journal paper and add many figures for experiments to differentiate his two papers, these figures will be completely ignored in the similarity score.

- The similarity checking system is limited to the text content of the paper. It can check the main text and the text in the tables, algorithms, math formulas, biography and affiliations. But it cannot check the text in figures that are included as bitmaps (pictures) in a paper. For example, if one includes an algorithm in a paper as a bitmap instead of as text, then the system will ignore that content. The system will only be able to compare the labels of the figures and not their content. Thus, an author with malicious intent could easily hide content from the matching system by transforming some content of an article as pictures.

- In the report that I have analyzed, I have found that the bibliography is also considered when computing the similarity score. Obviously this is unfair. Citing the same references as some other papers (especially when it is from the same author) is not plagiarism. In the case of the report that I have read, about 90% of the references were considered as matching those of several other documents, which increased the similarity score by probably at least 10%. But I have noticed that the editor can deactivate this function.

- I have also observed that the system can also match the biography of authors at the end of the paper and the acknowledgements with those of their previous papers. This is also a problem. It is clearly not plagiarism to reuse the same biography or acknowledgement in two papers. But in that system, it is counted in the similarity score.

Thus, I think that this system is quite imperfect.

What are the impact of this system?

The major impact is that many plagiarized papers can be detected early which is a good thing, as detecting these papers can save a lot of time to editor and reviewers.

However, a drawback of this system is that these metrics are clearly imperfect and there is a real danger that some editor just check the similarity score to take a decision on a paper and do not read a report carefully. For example, I have heard that some journals simply apply some arbitrary thresholds such as rejecting all papers with a score >= 30 %. This is in my opinion a problem if that threshold is too low because in some cases it is justified that an author reuses text from his own previous papers. For example, an author may want to reuse some basic problem definitions from his own paper in a second paper with a different contributions. Or an author may want to extend a conference into a journal paper with some new contributions. In such case, I think that accepting some overlap between papers is reasonable.

A few years ago, when such system were not in use, it was quite common that some authors would extend a conference paper into a journal paper by adding 50 % more content. Today, with this system, I think that this may not be allowed anymore, maybe forcing authors to avoid publishing early results in conference papers (or otherwise having to spend extra time to rewrite their paper in a different way to extend it as a journal paper). For example, one may be forced to rewrite definitions and theorems that are reused and cited from another of his own paper to reduce the similarity score.

Another aspect is that such system needs to create a database of all papers. But should the authors have to agree so that their papers are put in this database? Probably not because when a paper is published, the authors typically have to give the copyright to the publisher. Thus, I guess that the publisher is free to share the paper with such similarity checking service. But still, it raises some questions. If we make a comparison, there exists a homework plagiarism checking system called TurnItIn. This system have been actually legally challenged in the US and Canada, where some students have won some court battle so that their homework are not submitted/included in the system’s database. Although, it is a slightly different situation, we could imagine that some researchers may also want to avoid including their research papers in similarity checking system databases.

How to get a similarity checking report for your paper?

Checking the similarity of a paper is not free. However, many journal editors or associate editors have a subscription to use similarity checking services for free. Thus, if you know an editor or associate editor that has a subscription, he may perhaps be able to generate a report for your paper for free. Otherwise, one could pay to obtain the service.

Conclusion

In this blog post, I provided an overview of the similarity checking system called CrossCheck used by several publishers and journals. I also talked about how similarity scores appear to be computed and some limitations of this system, and its impact on the academic world. Hope this has been interesting. Please share your comments in the comment section below.

—

Philippe Fournier-Viger is a professor of Computer Science and also the founder of the open-source data mining software SPMF, offering more than 150 data mining algorithms.

Pingback: Turnitin, a smart tool for plagiarism detection? | The Data Mining Blog