In this blog post, I will give a brief overview of an important subfield of data mining that is called pattern mining. Pattern mining consists of using/developing data mining algorithms to discover interesting, unexpected and useful patterns in databases.

Pattern mining algorithms can be applied on various types of data such as transaction databases, sequence databases, streams, strings, spatial data, graphs, etc.

Pattern mining algorithms can be designed to discover various types of patterns: subgraphs, associations, indirect associations, trends, periodic patterns, sequential rules, lattices, sequential patterns, high-utility patterns, etc.

But what is an interesting pattern? There are several definitions. For example, some researchers define an interesting pattern as a pattern that appears frequently in a database. Other researchers wants to discover rare patterns, patterns with a high confidence, the top patterns, etc.

In the following, I will give examples of two types of patterns that can be discovered from a database.

Example 1. Discovering frequent itemsets

The most popular algorithm for pattern mining is without a doubt Apriori (1993). It is designed to be applied on a transaction database to discover patterns in transactions made by customers in stores. But it can also be applied in several other applications. A transaction is defined a set of distinct items (symbols). Apriori takes as input (1) a minsup threshold set by the user and (2) a transaction database containing a set of transactions. Apriori outputs all frequent itemsets, i.e. groups of items shared by no less than minsup transactions in the input database. For example, consider the following transaction database containing four transactions. Given a minsup of two transactions, frequent itemsets are “bread, butter”, “bread milk”, “bread”, “milk” and “butter”.

T1: bread, butter, spinach

T2: butter, salmon

T3: bread, milk, butter

T4: cereal, bread milk

Fig. 1. a transaction database

Apriori can also apply a post-processing step to generate “association rules” from frequent itemsets, which I will not discuss here.

The Apriori algorithm has given rise to multiple algorithms that address the same problem or variations of this problem such as to (1) incrementally discover frequent itemsets and associations , (2) to discover frequent subgraphs from a set of graphs, (3) to discover subsequences common to several sequences, etc.

R. Agrawal and R. Srikant. Fast algorithms for mining association rules in large databases. Research Report RJ 9839, IBM Almaden Research Center, San Jose, California, June 1994.

To know more about this topic, two papers give a good overview of itemset mining techniques:

- Fournier-Viger, P., Lin, J. C.-W., Vo, B, Chi, T.T., Zhang, J., Le, H. B. (2017). A Survey of Itemset Mining. WIREs Data Mining and Knowledge Discovery, Wiley, e1207 doi: 10.1002/widm.1207, 18 pages.

- Luna, J. M., Fournier-Viger, P., Ventura, S. (2019). Frequent Itemset Mining: a 25 Years Review. WIREs Data Mining and Knowledge Discovery, Wiley, 9(6):e1329. DOI: 10.1002/widm.1329

Example 2. Discovering sequential rules

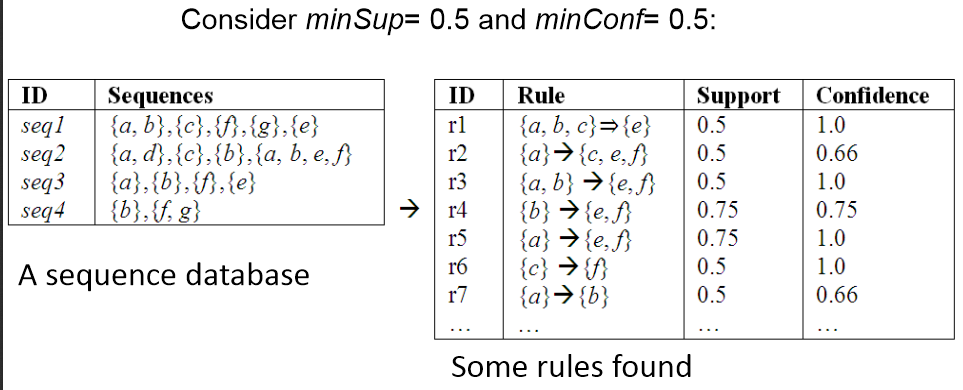

The second example that I will give is to discover sequential rules in a sequence database. A sequence database is defined as a set of sequences. A sequence is a list of transactions (as previously defined). For example in the left part of the following figure a sequence database containing four sequences is shown. The first sequence contains item a and b followed by c, followed by f, followed by g, followed by e. A sequential rule has the form X –> Y where X and Y are two distinct non empty sets of items. The meaning of a rule is that if the items of X appears in a sequence in any order, they will be followed by the items of Y in any order. The support of a rule is the number of sequence containing the rule divided by the total number of sequences. The confidence of a rule is the number of sequence containing the rule divided by the number of sequences containing its antecedent. The goal of sequential rule mining is to discover all sequential rules having a support and confidence no less than two thresholds given by the user named “minsup” and “minconf” . For example, on the right part of the following figure some sequential rules are shown for minsup=0.5 and minconf=0.5, discovered by the RuleGrowth algorithm.

For more details about sequential rule mining, this paper presents the RuleGrowth algorithm (I’m the author of that paper).

Fournier-Viger, P., Nkambou, R. & Tseng, V. S. (2011). RuleGrowth: Mining Sequential Rules Common to Several Sequences by Pattern-Growth. Proceedings of the 26th Symposium on Applied Computing (ACM SAC 2011). ACM Press, pp. 954-959.

Pattern mining is often viewed as techniques to explain the past by discovering patterns. However, patterns found can also be used for prediction. As an example of application, the following paper shows how sequential rules can be used for predicting the next webpages that will be visited by users on a website, with a higher accuracy than using sequential patterns (named “classic sequential rules” in that paper):

Fournier-Viger, P. Gueniche, T., Tseng, V.S. (2012). Using Partially-Ordered Sequential Rules to Generate More Accurate Sequence Prediction. Proc. 8th International Conference on Advanced Data Mining and Applications (ADMA 2012), Springer LNAI 7713, pp. 431-442.

Implementations of pattern mining algorithms

If you want to know more information about pattern mining, most of the general data mining books such as the book of Han & Kamber and Tan, Steinbach & Kumar have at least one chapter devoted to pattern mining.

If you want to test pattern mining algorithms, I recommend to have a look at the SPMF data mining library (I’m the project founder), which offers the Java source code of more than 55 pattern mining algorithms, with simple examples, and a simple command line and graphical user interface for quickly testing the algorithms.

Conclusion

Pattern mining is a subfield of data mining that has been active for more than 20 years, and is still very active. Pattern mining algorithms have a wide range of applications. For example, the Apriori algorithm can also be applied to optimized bitmap index of data wharehouse. In this blog post, I have given two examples to give a rough idea of what is the goal of pattern mining. However, note that it is not possible to summarize twenty years of research in a single blog post!

Hope that this post was interesting! If you like this blog, you can subscribe to my RSS Feed or Twitter account (https://twitter.com/philfv) to get notified about future blog posts. Also, if you want to support this blog, please tweet and share it!

–

P. Fournier-Viger is the founder of the Java open-source data mining software SPMF, offering more than 50 data mining algorithms.

This introduction helped me a lot! Thank you very much for this awesome post 🙂

Pingback: Brief report about the 16th Industrial Conference on Data mining 2016 (ICDM 2016) - The Data Mining & Research Blog

Pingback: Brief report about the 12th International Conference on Machine Learning and Data Mining conference (MLDM 2016) - The Data Mining & Research Blog

Pingback: An Introduction to High-Utility Itemset Mining - The Data Mining & Research Blog

Pingback: How to auto-adjust the minimum support threshold according to the data size - The Data Mining & Research Blog

Pingback: Big Problems only found in Big Data? - The Data Mining & Research Blog

kindly explain pattern mining for progressive database

Hi, a progressive database is a database that is updated by either adding, deleting or modifying the data stored in the database. A frequent pattern mining designed for progressive databases would update the results (the patters found) when the database changes. This type of algorithms are also called “incremental algorithms”. There have been many papers on this topic for various types of patterns, You may check them for more details. Besides, another approach for dealing with databases that are updated is to use the Iemset Tree structure or Memorry Efficient Itemset Tree (MEIT). Those structures are designed for querying a database in real-time.

Hope this helps.

Thanks for the post. Found it very helpful.

Hi, you are welcome. Glad it is useful.

Philippe

very helpful. May I ask does that mean for the example above three rules below will have same supp. and conf.?

{a,b,c} > {e} (this is r1)

{a,b} > {c,e}

{a} > {b,c,e}

Hi, Glad it is helpful. Here is the answer to your question:

The rule {a,b,c} > {e} appears in two sequences because the items a, b,c appears in any order before item e in two sequences (sequence 1 and sequence 2). Thus the support of this rule is 2 sequences / 4 sequences = 0.5 or 50%.

The rule {a,b} > {c, e} appears in one sequences because the items a,b appears in any order before items c,e in any order, in only one sequence (sequence 1). This rule does not appear in sequence 2 because in that sequence b is after c.

To say that the rule appears in a sequence a and b must be both before c and e.

Thus the support of this rule is 1 sequences / 4 sequences = 0.25 or25%.

The rule {a} > {b,c,e} appears in two sequences because the item a appears before the items b, c e in two sequences (sequence 1 and sequence 2). Thus the support of this rule is 2 sequences / 4 sequences = 0.5 or 50%.

Ok, so now about the confidence, the calculation is as follows

The confidence of {a,b,c} > {e} is the number of times that {a,b,c} > {e} appears divided by the number of sequences that contains a,b,c in any order. Thus, the confidence of {a,b,c} > {e} is 2 sequences / 2 sequences = 100 %.

The confidence of {a,b} > {c, e} is the number of times that {a,b} > {c, e} appears divided by the number of sequences that contains a,b in any order. Thus, the confidence of {a,b} > {c, e} is 1 sequence / 3 sequences = 33 %.

The confidence of {a} > {b,c,e} is the number of times that {a} > {b,c,e} appears divided by the number of sequences that contains a in any order. Thus, the confidence of {a,b,c} > {e} is 2 sequences /3 sequences = 66 %.

By the way, what i have described here is called “sequential rules“. There also exists many other types of patterns that you can discover in sequences or transactions. If you have a look at the SPMF library (that i am the founder, you will see more than 100 algorithms. Each of them has a simple example on the “documentation” page.

http://www.philippe-fournier-viger.com/spmf/index.php?link=documentation.php

Thank you

oh…it’s hard:(

Pingback: (video) Discovering interpretable high utility patterns in databases - The Data Mining BlogThe Data Mining Blog

Pingback: The PAKDD 2019 conference (a brief report) - The Data Mining BlogThe Data Mining Blog

Pingback: The future of pattern mining - The Data Mining BlogThe Data Mining Blog

Pingback: An Introduction to Sequential Pattern Mining - The Data Mining BlogThe Data Mining Blog

Pingback: What is the difference between data mining and machine learning? | The Data Mining Blog

Pingback: An Overview of Pattern Mining Techniques (by data types) | The Data Mining Blog

Pingback: Towards SPMF v3.0… | The Data Mining Blog

Pingback: New videos about pattern mining | The Data Mining Blog

Pingback: How to Analyze the Complexity of Pattern Mining Algorithms? | The Data Mining Blog

Pingback: SPMF 2.55 is released (10 new algorithms!) | The Data Mining Blog

Pingback: A brief report about IEEE DSAA 2022 | The Data Mining Blog

Pingback: Free Pattern Mining Course | The Data Blog

Pingback: An introduction to frequent subgraph mining | The Data Blog

Pingback: The story of the most influential paper award of PAKDD 2024 | The Data Blog