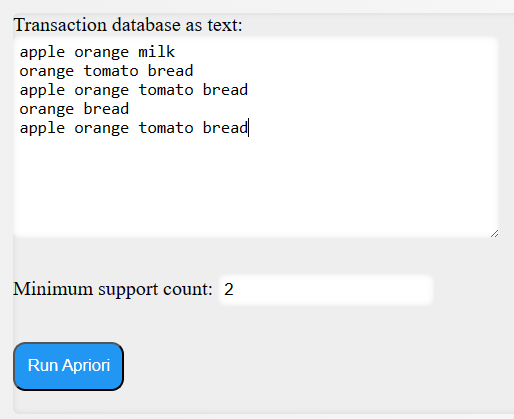

Sequential pattern mining (SPM) is a data mining technique that aims to discover patterns or subsequences that occur frequently in sequential data. Sequential data can be found in various domains such as customer transactions, web browsing, text analysis, and bioinformatics. SPM can help reveal interesting and useful insights from such data, such as customer behavior, website navigation, text structure, and gene expression.
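The following minimal Python sketch makes the idea of a pattern that "occurs frequently" concrete. It assumes the usual definitions. A sequence is an ordered list of itemsets. A pattern is contained in a sequence if each of its itemsets is a subset of a distinct itemset of the sequence, in the same order. The support of a pattern is the number of sequences that contain it. The function names (`seq`, `contains`, `support`) and the toy data are hypothetical. Real SPM algorithms explore the space of candidate patterns much more efficiently than this one-pattern-at-a-time check.

```python
from typing import FrozenSet, Iterable, List, Sequence

Itemset = FrozenSet[str]
SequenceT = List[Itemset]


def seq(*itemsets: Iterable[str]) -> SequenceT:
    """Build a sequence (ordered list of itemsets) from plain iterables."""
    return [frozenset(items) for items in itemsets]


def contains(sequence: SequenceT, pattern: SequenceT) -> bool:
    """True if every itemset of `pattern` is a subset of a distinct itemset of
    `sequence`, in the same order. Matching each pattern itemset to the
    earliest possible position (greedy) is sufficient for this check."""
    pos = 0
    for itemset in pattern:
        while pos < len(sequence) and not itemset <= sequence[pos]:
            pos += 1
        if pos == len(sequence):
            return False
        pos += 1  # the next pattern itemset must match a strictly later itemset
    return True


def support(database: Sequence[SequenceT], pattern: SequenceT) -> int:
    """Number of sequences in `database` that contain `pattern`."""
    return sum(1 for s in database if contains(s, pattern))


# Toy database of three sequences (e.g., three customers' purchase histories).
database = [
    seq(["a"], ["b", "c"], ["d"]),
    seq(["a", "b"], ["c"]),
    seq(["b"], ["a"], ["c"]),
]
minsup = 2
for pattern in (seq(["a"], ["c"]), seq(["b"], ["c"]), seq(["b", "c"])):
    s = support(database, pattern)
    print([sorted(i) for i in pattern], s, "frequent" if s >= minsup else "infrequent")
```

With a minimum support of 2, the patterns (a)(c) (support 3) and (b)(c) (support 2) are frequent, while the single-itemset pattern (b, c) (support 1) is not.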

Because I often receive questions about the applications of sequential pattern mining, I will discuss this topic in this blog post. I will present several example applications of sequential pattern mining, including malware detection, COVID-19 analysis, e-learning, and point-of-interest recommendation. For each of them, I will briefly explain how SPM can be used and cite some research papers that have applied SPM for that purpose.

Note: The list of applications on this page is far from exhaustive. There are hundreds of applications, and I do not cite all the related papers, as there are too many. I give some of my own papers as examples because I am familiar with their content.

Sequential Pattern Mining for Malware Detection

Malware is malicious software that can harm computers and networks by stealing data, encrypting files, disrupting services, or spreading viruses. Malware detection is the task of identifying and classifying malware based on its behavior and characteristics. One of the challenges of malware detection is that malware can change its appearance or code to evade detection, using techniques such as obfuscation, packing, encryption, or metamorphism.

One way to overcome this challenge is to analyze the behavior of malware during execution, rather than its static code. A common way to represent the behavior of malware is by the Application Programming Interface (API) calls that it makes to interact with the operating system or other applications. API calls can reflect the actions and intentions of malware, such as creating files, modifying registry keys, sending network packets, or injecting code.

Sequential pattern mining can be used to discover frequent patterns of API calls made by different malware families or variants. These patterns can then be used as features for malware classification or clustering. For example, Nawaz et al. (2022) proposed MalSPM, an intelligent malware detection system based on sequential pattern mining for the analysis and classification of metamorphic malware behavior. They used a dataset that contains sequences of API calls made by different malware samples on the Windows OS and applied SPM algorithms to find frequent API calls and their patterns. They also discovered sequential rules between API call patterns, as well as maximal and closed frequent API call patterns. They then used these patterns as features for seven classifiers and compared their performance with existing state-of-the-art malware detection approaches. They showed that MalSPM outperformed these approaches in terms of accuracy, precision, recall, F1-score, and ROC-AUC.
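The idea of using mined API call patterns as classification features can be sketched as follows, under simplifying assumptions. Each execution trace is a list of API call names. A pattern is a tuple of calls that must appear in the same order, though other calls may occur in between. Each trace becomes a binary vector that indicates which patterns it contains. The pattern list and the traces are hypothetical stand-ins, not MalSPM's data or code. The API names are only illustrative.

```python
from typing import List, Sequence, Tuple


def is_subsequence(trace: Sequence[str], pattern: Sequence[str]) -> bool:
    """True if the calls in `pattern` appear in `trace` in the same order,
    possibly with other calls in between (gaps allowed)."""
    remaining = iter(trace)
    # `call in remaining` consumes the iterator up to the first match,
    # so each later call must be found after the previous one.
    return all(call in remaining for call in pattern)


def pattern_features(trace: Sequence[str], patterns: Sequence[Tuple[str, ...]]) -> List[int]:
    """Binary feature vector: 1 if the i-th pattern occurs in the trace."""
    return [1 if is_subsequence(trace, p) else 0 for p in patterns]


# Hypothetical patterns, standing in for the output of an SPM algorithm.
patterns = [
    ("CreateFileW", "WriteFile"),
    ("RegOpenKeyExW", "RegSetValueExW"),
    ("socket", "connect", "send"),
]

# Two toy API call traces (not real malware data).
traces = [
    ["CreateFileW", "ReadFile", "WriteFile", "socket", "connect", "closesocket"],
    ["RegOpenKeyExW", "socket", "RegSetValueExW", "connect", "send"],
]
X = [pattern_features(t, patterns) for t in traces]
print(X)  # [[1, 0, 0], [0, 1, 1]]
```

Vectors like `X` could then be passed, together with family labels, to any off-the-shelf classifier.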

Research papers:

Nawaz, M. S., Fournier-Viger, P., Nawaz, M. Z., Chen, G., Wu, Y. (2022). MalSPM: Metamorphic Malware Behavior Analysis and Classification using Sequential Pattern Mining. Computers & Security, Elsevier.

Blog post:

How to Detect and Classify Metamorphic Malware with Sequential Pattern Mining (MalSPM)

Research paper on related topic of botnet detection:

Daneshgar FF, Abbaspour M. A two-phase sequential pattern mining framework to detect stealthy P2P botnets. Journal of Information Security and Applications. 2020 Dec 1;55:102645.

Sequential Pattern Mining for Healthcare

COVID-19 is a novel coronavirus disease that emerged in late 2019 and has since spread to more than 200 countries, causing more than 5 million deaths worldwide. COVID-19 genome sequencing is critical for understanding the virus's behavior, its origin, and how fast it mutates, as well as for developing drugs, vaccines, and effective preventive strategies.

SPM can be used to learn interesting information from COVID-19 genome sequences, such as frequent patterns of nucleotide bases and their relationships with each other. These patterns can help examine the evolution of and variations in COVID-19 strains and identify potential mutations that may affect the virus's infectivity or virulence. For example, Nawaz et al. (2021) applied SPM to a corpus of COVID-19 genome sequences to find interesting hidden patterns. They also applied sequence prediction models to evaluate whether nucleotide bases can be predicted from the previous ones. Moreover, they designed an algorithm to find the locations in the genome sequences where nucleotide bases have changed and to calculate the mutation rate. They suggested that SPM and mutation analysis techniques can reveal interesting information and patterns in COVID-19 genome sequences.
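The mutation-location step can be illustrated with a deliberately simple sketch. It assumes that the two genome sequences are already aligned to the same length and that only substitutions matter, so insertions and deletions are ignored. It reports the 1-based positions where the bases differ. As an assumed definition of the mutation rate, it also computes the fraction of differing positions. This is a hypothetical illustration, not the algorithm designed by Nawaz et al.

```python
from typing import List, Tuple


def substitutions(reference: str, variant: str) -> List[Tuple[int, str, str]]:
    """Return (1-based position, reference base, variant base) for every
    position where two already-aligned sequences differ."""
    if len(reference) != len(variant):
        raise ValueError("sequences must be aligned to the same length")
    return [
        (i + 1, ref_base, var_base)
        for i, (ref_base, var_base) in enumerate(zip(reference, variant))
        if ref_base != var_base
    ]


def substitution_rate(reference: str, variant: str) -> float:
    """Assumed definition: fraction of aligned positions where the bases differ."""
    return len(substitutions(reference, variant)) / len(reference)


# Toy sequences, not real SARS-CoV-2 data.
reference = "ACGTACGTAC"
variant = "ACGTTCGTAA"
print(substitutions(reference, variant))      # [(5, 'A', 'T'), (10, 'C', 'A')]
print(substitution_rate(reference, variant))  # 0.2
```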

Research papers:

Nawaz, S., Fournier-Viger, P., Aslam, M., Li, W., He, Y., Niu, X. (2023). Using Alignment-Free and Pattern Mining Methods for SARS-CoV-2 Genome Analysis. Applied Intelligence.

Nawaz, S. M., Fournier-Viger, P., He, Y., Zhang, Q. (2023). PSAC-PDB: Analysis and Classification of Protein Structures. Computers in Biology and Medicine, Elsevier.

Blog post: Analyzing the COVID-19 genome with AI and data mining techniques (paper + data + code)

Another healthcare application of SPM is to discover sequential patterns from the clinical records of patients, such as patients who have been diagnosed with COVID-19 or who are at risk of developing a chronic disease. These patterns can help identify risk factors, symptoms, comorbidities, treatments, outcomes, or complications associated with a disease. For example, Cheng et al. (2017) proposed a framework for the early assessment of chronic diseases. It mines sequential risk patterns with time interval information from diagnostic clinical records, using SPM and classification modeling techniques.
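A key ingredient of such risk patterns is the time interval between events. The following minimal sketch checks whether a sequence of diagnoses occurs in a patient record with at most a given number of days between consecutive events. It then counts how many records contain that sequence. The record format `(day, code)`, the codes `A` and `B`, and the maximum-gap constraint are hypothetical simplifications. This is not the method of Cheng et al. (2017).

```python
from typing import List, Optional, Sequence, Tuple

Event = Tuple[int, str]  # (day of the visit, diagnosis code)


def occurs_with_max_gap(
    record: Sequence[Event],
    pattern: Sequence[str],
    max_gap: int,
    start: int = 0,
    prev_day: Optional[int] = None,
) -> bool:
    """True if `pattern` occurs in `record` (sorted by day) in order, with at
    most `max_gap` days between consecutive matched events. Backtracks, since
    greedily matching the earliest occurrence can miss valid occurrences."""
    if not pattern:
        return True
    for i in range(start, len(record)):
        day, code = record[i]
        if prev_day is not None and day - prev_day > max_gap:
            return False  # record is sorted, so later events are even further away
        if code == pattern[0] and occurs_with_max_gap(record, pattern[1:], max_gap, i + 1, day):
            return True
    return False


def support(records: Sequence[Sequence[Event]], pattern: Sequence[str], max_gap: int) -> int:
    """Number of patient records containing `pattern` under the gap constraint."""
    return sum(1 for r in records if occurs_with_max_gap(r, pattern, max_gap))


records: List[List[Event]] = [
    [(0, "A"), (20, "A"), (25, "B")],  # A on day 20 is followed by B 5 days later
    [(0, "A"), (30, "B")],             # B comes 30 days after A: too late
]
print(support(records, ["A", "B"], max_gap=10))  # 1
```

The first record shows why backtracking is needed. Matching the first `A` (day 0) leaves `B` too far away, but the second `A` (day 20) yields a valid occurrence.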

Research paper:

Cheng YT, Lin YF, Chiang KH, Tseng VS. (2017) Mining Sequential Risk Patterns From Large-Scale Clinical Databases for Early Assessment of Chronic Diseases: A Case Study on Chronic Obstructive Pulmonary Disease. IEEE J Biomed Health Inform. 2017 Mar;21(2):303-311.

Related papers:

Ou-Yang, C., Chou, S. C., Juan, Y. C., & Wang, H. C. (2019). Mining Sequential Patterns of Diseases Contracted and Medications Prescribed before the Development of Stevens-Johnson Syndrome in Taiwan. Applied Sciences, 9(12), 243

Lee, E. W., & Ho, J. C. (2019). FuzzyGap: Sequential Pattern Mining for Predicting Chronic Heart Failure in Clinical Pathways. AMIA Summits on Translational Science Proceedings, 2019, 222.

Sequential Pattern Mining for E-Learning

E-learning is the use of electronic media and information technologies to deliver education and training. E-learning systems have undergone rapid growth in the current decade and have generated a tremendous amount of e-learning resources that are highly heterogeneous and in various media formats. This information overload has led to the need for personalization in e-learning environments, to provide more relevant and suitable learning resources to learners according to their individual needs, objectives, preferences, characteristics, and learning styles.

SPM can be used to discover sequential patterns of learners’ behavior and interactions with e-learning systems, such as the sequences of learning resources accessed, the sequences of learning activities performed, or the sequences of learning outcomes achieved. These patterns can help understand the learning processes and preferences of learners, identify their strengths and weaknesses, provide feedback and guidance, recommend appropriate learning resources or activities, predict their performance or satisfaction, and improve the design and evaluation of e-learning systems.
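As a small illustration of mining learner behavior, the sketch below finds ordered pairs of learning resources that occur in at least `minsup` learner sequences. A pair (x, y) counts when x is accessed before y, not necessarily consecutively. For brevity it is restricted to patterns of length two. The resource names and the function `frequent_ordered_pairs` are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def frequent_ordered_pairs(
    sessions: Sequence[Sequence[str]], minsup: int
) -> Dict[Tuple[str, str], int]:
    """Count, for each ordered pair (x, y), the number of learner sequences in
    which x is accessed at some point before y, and keep pairs whose count
    reaches `minsup`."""
    counts: Counter = Counter()
    for session in sessions:
        pairs = {
            (session[i], session[j])
            for i, j in combinations(range(len(session)), 2)  # all i < j
            if session[i] != session[j]
        }
        counts.update(pairs)  # a set, so each sequence counts a pair at most once
    return {pair: c for pair, c in counts.items() if c >= minsup}


# Toy access sequences of three learners.
sessions: List[List[str]] = [
    ["intro_video", "quiz1", "forum", "quiz2"],
    ["intro_video", "forum", "quiz1"],
    ["quiz1", "intro_video", "quiz2"],
]
for pair, count in sorted(frequent_ordered_pairs(sessions, minsup=2).items()):
    print(pair, count)
```

On this toy data, the frequent pairs indicate, for example, that two of the three learners watched `intro_video` before attempting `quiz1`.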

There are many papers on this topic. Some of the earliest were written by me during my Ph.D. studies (in 2008).

Research papers:

Song, W., Ye, W., Fournier-Viger, P. (2022). Mining sequential patterns with flexible constraints from MOOC data. Applied Intelligence, to appear.

DOI: 10.1007/s10489-021-03122-7

Fournier-Viger, P., Nkambou, R., Mayers, A. (2008). Evaluating Spatial Representations and Skills in a Simulator-Based Tutoring System. IEEE Transactions on Learning Technologies (TLT), 1(1):63-74.

DOI: 10.1109/TLT.2008.13

Fournier-Viger, P., Nkambou, R., Mephu Nguifo, E. (2008). A Knowledge Discovery Framework for Learning Task Models from User Interactions in Intelligent Tutoring Systems. Proceedings of the 7th Mexican Intern. Conference on Artificial Intelligence (MICAI 2008). LNAI 5317, Springer, pp. 765-778.

Yau, T. S. Mining Sequential Patterns of Students’ Access on Learning Management System. Data Mining and Big Data, 191.

王文芳 (2016) Learning Process Analysis Based on Sequential Pattern Mining in a Web-based Inquiry Science Environment.

Rashid, A., Asif, S., Butt, N. A., Ashraf, I. (2013). Feature Level Opinion Mining of Educational Student Feedback Data using Sequential Pattern Mining and Association Rule Mining. International Journal of Computer Applications 81.

Sequential Pattern Mining for Point-of-Interest Recommendation

Point-of-interest (POI) recommendation is the task of recommending places or locations that users may be interested in visiting based on their preferences, context, and history. POI recommendation has many applications such as tourism, navigation, social networking, advertising, and urban planning. POI recommendation can help users discover new places that match their interests and needs, save time and effort in searching for information, enhance their travel experience and satisfaction, and increase their loyalty and engagement with service providers.

SPM can be used to discover sequential patterns of users’ mobility behavior and preferences, such as the sequences of POIs visited by users within a day or a trip. These patterns can help understand the travel patterns and preferences of users, identify their travel purposes and intentions, provide feedback and guidance, recommend appropriate POIs or routes based on their travel history or context, predict their next destination or activity, and improve the design and evaluation of POI recommendation systems.
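Next-POI recommendation can be sketched with simple sequential rules of the form x → y, read as "y is visited right after x". The confidence of such a rule is the fraction of trajectories containing x in which x is directly followed by y. Given the user's current POI, the rule with the highest confidence gives the recommendation. The trajectories, the threshold `minconf`, and the function names are hypothetical. The cited works use richer models than this single-step sketch.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Rules = Dict[str, List[Tuple[str, float]]]


def mine_next_poi_rules(trajectories: Sequence[Sequence[str]], minconf: float) -> Rules:
    """Rules x -> y meaning 'y is visited immediately after x'.
    confidence = (#trajectories with x directly followed by y) / (#trajectories containing x)."""
    contains_x: Counter = Counter()
    x_then_y: Counter = Counter()
    for t in trajectories:
        contains_x.update(set(t))
        x_then_y.update(set(zip(t, t[1:])))  # consecutive pairs, once per trajectory
    rules: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for (x, y), count in x_then_y.items():
        confidence = count / contains_x[x]
        if confidence >= minconf:
            rules[x].append((y, confidence))
    for x in rules:
        rules[x].sort(key=lambda rule: (-rule[1], rule[0]))  # best rule first
    return dict(rules)


def recommend_next(rules: Rules, current_poi: str, k: int = 1) -> List[str]:
    """Top-k next POIs for a user currently at `current_poi` (empty if no rule)."""
    return [y for y, _ in rules.get(current_poi, [])[:k]]


# Toy trajectories (one list of visited POIs per trip).
trajectories = [
    ["hotel", "museum", "cafe", "park"],
    ["hotel", "museum", "restaurant"],
    ["station", "museum", "cafe"],
    ["hotel", "park"],
]
rules = mine_next_poi_rules(trajectories, minconf=0.5)
print(recommend_next(rules, "hotel"))       # ['museum']
print(recommend_next(rules, "restaurant"))  # []
```

Restricting rules to immediate successors keeps the sketch short. Allowing y to occur anywhere after x would instead capture longer-range travel habits.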

Research papers:

Amirat, H., Lagraa, N., Fournier-Viger, P., Ouinten, Y., Kherfi, M. L., Guellouma, Y. (2022). Incremental Tree-based Successive POI Recommendation in Location-based Social Networks. Applied Intelligence, to appear.

Lee, G. H., & Han, H. S. (2019). Clustering of tourist routes for individual tourists using sequential pattern mining. The Journal of Supercomputing, 1-18.

Yang, C., & Gidófalvi, G. (2017). Mining and visual exploration of closed contiguous sequential patterns in trajectories. International Journal of Geographical Information Science, 1-23.

Some other applications

Theorem proving

Nawaz, M. S., Sun, M., & Fournier-Viger, P. (2019, May). Proof guidance in PVS with sequential pattern mining. In International Conference on Fundamentals of Software Engineering (pp. 45-60). Springer, Cham.

Fire spot identification

Istiqomah, N. (2018). Fire Spot Identification Based on Hotspot Sequential Pattern and Burned Area Classification. BIOTROPIA-The Southeast Asian Journal of Tropical Biology, 25(3), 147-155.

Sentiment analysis

Rana, T. A., & Cheah, Y. N. (2018). Sequential patterns-based rules for aspect-based sentiment analysis. Advanced Science Letters, 24(2), 1370-1374.

Pollution analysis

Yang, G., Huang, J., & Li, X. (2017). Mining Sequential Patterns of PM 2.5 Pollution in Three Zones in China. Journal of Cleaner Production.

Anomaly detection

Rahman, A., et al. (2016). Finding Anomalies in SCADA Logs Using Rare Sequential Pattern Mining. International Conference on Network and System Security. Springer International Publishing.

Authorship attribution

Pokou J. M., Fournier-Viger, P., Moghrabi, C. (2016). Authorship Attribution Using Small Sets of Frequent Part-of-Speech Skip-grams. Proc. 29th Intern. Florida Artificial Intelligence Research Society Conference (FLAIRS 29), AAAI Press, pp. 86-91

Video games

Leece, M., Jhala, A. (2014). Sequential Pattern Mining in StarCraft: Brood War for Short and Long-Term Goals. Proc. Workshop on Adversarial Real-Time Strategy Games at AIIDE Conference. AAAI Press.

Restaurant recommendation

Han, M., Wang, Z., Yuan, J. (2013). Mining Constraint Based Sequential Patterns and Rules on Restaurant Recommendation System. Journal of Computational Information Systems, 9(10): 3901-3908.

Conclusion

In this blog post, I have introduced some representative applications of sequential pattern mining. I hope that it has been interesting. If you want to know more about sequential pattern mining, you can check my survey of sequential pattern mining and my video introduction to sequential pattern mining (24 minutes). You can also check out my free online pattern mining course and the SPMF open-source software, which offers hundreds of pattern mining algorithms.

—

Philippe Fournier-Viger is a professor of Computer Science and also the founder of the open-source data mining software SPMF, offering more than 120 data mining algorithms.